Have you ever wondered why some queries feel instant while others crawl for minutes?

This short guide shows how record layout on disk controls speed, cost, and scalability. You’ll see how different layouts map records to blocks and buckets, and how that affects selection, insertion, deletion, and updates.

Understanding this link between the table view and the physical layout helps you pick the right approach for your workload. Good placement reduces duplicates, cuts storage waste, and slashes I/O for common queries.

We’ll walk through the common types — sequential (pile and sorted), heap, hash, ISAM, and B+ tree — and explain cluster layouts, spanned vs. unspanned records, and bucket addresses. By the end, you’ll have a checklist to match your data and operations to the best layout.

What you’ll learn about file organization in DBMS today

Which layout makes your common queries fast and your maintenance predictable?

You’ll see clear goals: faster selection of records, simpler insert/delete/update operations, fewer duplicates, and lower storage cost. These benefits translate to less I/O, fewer full scans, and smoother concurrency.

Next, you’ll meet the major types—sequential, heap, clustered, ISAM, hash, and B+ tree—and learn when each type fits best. Which one handles bulk loads? Which gives O(1) lookups? Which supports range scans? You’ll get practical answers.

- Map how each layout supports fast access for the records you query most.

- Pick types for bulk loads, direct lookups, range scans, or heavy updates.

- Understand maintenance: overflow areas, reorganization triggers, and key metrics to watch.

- Walk away with quick heuristics—heap for ingest, hash for direct keys, B+ tree for ranges—and their caveats.

Fundamentals: records, files, and physical storage

What happens when a record lands on a disk block—does it stay neat or span across pages?

Think of your database as a set of files split into fixed-size data blocks. Each block holds several records. The block is the unit your disk and cache move, so minimizing how many blocks you touch speeds queries.

How records map to data blocks and buckets

Every record has a home slot on a block. When hashing is used, a hash function converts a key into a bucket address. That bucket points to one block or a group of blocks where the record lives.

Spanned vs. unspanned records

Unspanned records fit entirely within one block—fewer reads, less complexity. Spanned records cross block boundaries. Spanning can store large rows but raises I/O and wasted space.

Why the method impacts access, insertion, and updates

Choice of method decides whether you jump straight to a block or scan many. Insert-heavy workloads prefer low-friction placement. Read-heavy range queries need sorted layouts. Updates may touch one bucket or force reorganization.

- Blocks, not rows, move on disk—optimize block touches.

- Buckets (via hash) give direct hits; sorted layouts aid ranges.

- Good mapping cuts redundancy and speeds detection of duplicate records.

| Aspect | Unspanned | Spanned |

|---|---|---|

| Read cost | Low (single block) | Higher (multiple blocks) |

| Space use | Efficient | Possible waste |

| Best for | Short records, OLTP | Large rows, BLOBs |

Sequential file organization: simple order, predictable access

Imagine records stacked like books on a shelf—what does that buy you for big scans? Sequential layouts place each record one after another, so reading many rows is fast and predictable.

Pile file method: inserted at the end of the file

The pile approach appends each new record at the end file. Writes are cheap and simple because a new row is just inserted end file. But lookups often need a full traversal—search time is O(n).

Sorted file method: based on a primary key or key value

A sorted file keeps records in a defined order by primary key or another key value. This enables binary search and O(log n) reads. The trade-off: maintaining order after inserts or deletes costs CPU and I/O.

Typical operations and time costs

- Pile scans: linear traversal, O(n).

- Sorted reads: binary search, O(log n); writes require repositioning or re-sort.

- Best fit: batch processing, reports, or tape-like workflows.

Where sequential files shine and where they struggle

Strengths: predictable sweeps and low-cost bulk reads.

Weaknesses: slow random access and growing maintenance when many small updates mix with reads.

Heap file organization: fast bulk loads, unordered storage

When you need fast ingest and don’t care about sort order, a heap file keeps writes cheap and simple.

The method appends new rows and places each record into any available data block the system picks. If a block fills, the next record is routed to another block. That means inserts are quick and often done by adding at the end.

How new records are inserted

New records are usually appended or placed into free slots. This avoids maintaining order and makes bulk loads fast.

Trade-offs: scans, space, and scattering

Because there’s no order, searches, updates, and deletes often scan the whole file. Over time, unused slots and fragmented blocks waste space and increase I/O.

- Pros: very fast inserts, ideal for initial loads and heavy ingestion.

- Cons: full scans when a row must be found; related rows may be scattered across pages.

- Pairing a heap with targeted indexes can reduce how often the entire file is accessed.

| Characteristic | Heap file | Best use |

|---|---|---|

| Insert cost | Low (append/slot) | Bulk loads, ingestion |

| Lookup cost | High (scan) | Rare point queries |

| Space behavior | Fragmentation possible | Periodic reorganization |

Hash file organization: O(1) lookups with a hash function

Can a tiny computation skip whole scans and land you on the exact block you need? That’s the promise of a hash file organization.

You apply a hash function to a key value—often the primary key—and it returns a bucket address. The system then reads or writes the target block directly, so basic operations like insertion, search, update, and deletion are near O(1) when the table is well-sized.

Collisions and overflow handling

Different keys can map to the same bucket. You resolve this by chaining, overflow blocks, or open addressing. Each approach trades simplicity for extra I/O or more complex bookkeeping.

Practical notes and caveats

Strength: blazing equality lookups without scanning the entire file—ideal for high-volume OLTP.

- Plan for rehashing or extendible hashing as growth changes bucket loads.

- Monitor load factor and bucket distribution to avoid long chains.

- Not suitable for range queries—buckets are unordered, so scans are expensive.

| Aspect | Hash file | Best fit |

|---|---|---|

| Lookup cost | Near O(1) | Point lookups by key |

| Range support | Poor | Use B+ tree instead |

| Space behavior | Uneven if skewed | Watch bucket load |

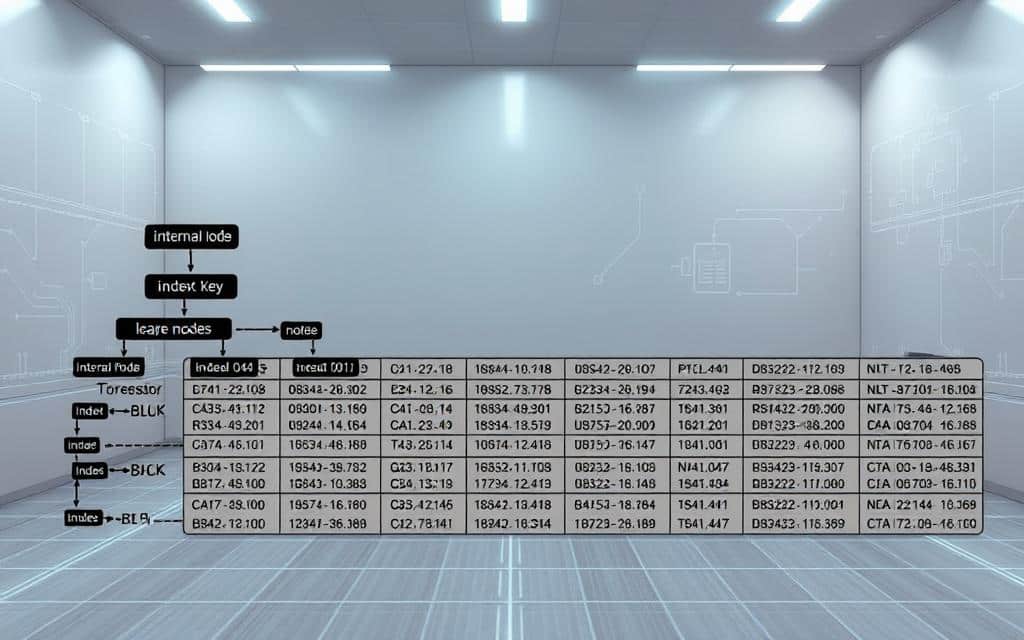

Indexed approaches: ISAM and B+ tree for balanced access

Want fast range queries without rebuilding your whole storage every time data grows?

ISAM stores a sequential main area with a static primary index and routes new inserts to overflow zones. Reads are very fast because the main part stays sorted. But when overflow chains lengthen, you schedule periodic merges to restore performance.

How B+ trees differ and why they adapt

B+ trees keep keys in internal nodes and put actual records at leaf pages. The structure stays balanced, so inserts and deletes adjust local nodes rather than rebuilding the whole index. Sibling pointers make range scans quick and predictable.

When you pick which method

Both approaches speed random access and range queries, but they add space overhead and write maintenance. Use ISAM for read-mostly reporting when you can tolerate occasional reorganizations.

- B+ trees: dynamic growth, fast ranges, good for mixed workloads.

- ISAM: simple index, cheap reads, needs periodic maintenance.

- Indexes: dense vs. sparse choices affect lookup cost and how the records file is accessed.

| Aspect | ISAM | B+ tree |

|---|---|---|

| Growth handling | Periodic merges | Automatic balancing |

| Range scans | Good | Excellent |

| Maintenance | Higher on insert-heavy | Moderate, localized |

Clustered file organization: storing related records together

When related rows live near one another, joins can run with far fewer disk reads.

Clustered layouts place related records for joined tables into the same block ranges. That reduces random I/O and improves cache locality for common queries.

Clustered keys and hash clusters

You can cluster by a clustered key—often a field based primary key—or use hash clusters to group rows that share the same hash of that key.

When frequent joins and 1:M relationships benefit

This method shines for one-to-many joins—like courses and students or customers and orders. Fewer blocks are read and records file accessed during joins drops significantly.

- Benefit: lower I/O and faster join performance for recurring patterns.

- Trade-off: choosing the clustered key, handling growth, and skewed distribution add operations overhead.

- Pick: indexed clusters for ordered range access; hash clusters for equality-heavy joins.

| Aspect | Indexed cluster | Hash cluster |

|---|---|---|

| Best for | Range scans | Equality joins |

| Maintenance | Moderate | Higher with rehashing |

| Storage behavior | Ordered records stored | Grouped by hash |

Choosing the right file organization method for your database

Does your workload favor bulk loads, point lookups, or long range scans? That single answer guides which organization method will cut I/O, lower costs, and simplify maintenance.

Decision factors: data size, access patterns, and operations

Start by measuring your query mix and update rate. Large, growing datasets amplify any design flaws.

Pick by pattern: equality-heavy workloads need direct access. Range queries reward ordered layouts. Heavy ingest benefits from append-friendly methods.

Common matches: quick heuristics to choose a method

- Heap: best for bulk loads and fast writes; pair with selective indexes for occasional lookup.

- Hash: ideal for direct key access at scale; avoid for range scans.

- B+ tree: balanced—great for ranges, sorting, and partial-key searches.

- ISAM: suits read-heavy sequential access with planned merges for overflow.

| Goal | Best types | When to pick | Trade-off |

|---|---|---|---|

| Bulk ingest | Heap | High insert rate, batch loads | Costly point lookups |

| Direct key lookup | Hash | Equality queries, low range needs | Poor range support |

| Range & sort | B+ tree | Reporting, partial-key searches | Index maintenance overhead |

Best practices for operations, storage, and space efficiency

How do you keep growth from turning fast lookups into long waits?

Start with strict keys and pre-insert validation. Enforce uniqueness to stop duplicate records before they arrive. That keeps storage lean and reduces later cleanup work.

Minimizing redundancy and avoiding duplicate records

Validate every insertion new request against indexes and constraints. Reject duplicates at the client or service layer. Periodic dedupe sweeps recover lost space and restore query speed.

Balancing index overhead with query performance

Indexes speed reads but slow insertion and updates. Keep only needed indexes, and measure how each extra index affects write latency and operations cost.

Planning for growth: rehashing, reorganization, and maintenance

Monitor bucket load factors for hash structures and plan rehash before chains lengthen. Schedule reorganizations for sequential areas and merge ISAM overflow to restore locality.

- Tip: When a new record arrives, check page fill and fragmentation to avoid reads that touch extra blocks.

- Tip: Tune B+ tree node fill factors to reduce splits and preserve fast ranges.

| Practice | Benefit | Cost |

|---|---|---|

| Enforce keys | Fewer duplicates, cleaner data | Validation overhead |

| Limit indexes | Faster writes, simpler maintenance | Slower point queries |

| Monitor hash load | Stable O(1) lookups | Rehash work at scale |

| Scheduled reorgs | Restored locality and less wasted space | Planned downtime or throttling |

For deeper guidance on indexing and how it impacts where a file is accessed, see our indexing guide. Apply these rules and your operations will stay efficient as the system grows.

Wrap-up and next steps for mastering organization methods

Ready to turn these concepts into an actionable plan for your systems?

Start by profiling which queries touch the most blocks and which records get read or written most. Match each table to a suitable file organization—heap for fast bulk insertion, hash for O(1) key lookups with a solid hash function, and B+ tree for ordered ranges and sorts.

Use ISAM when reads dominate but schedule merges to control overflow. Cluster related rows to cut reads during joins. Plan rehashing and periodic reorganization as data grows.

Next steps: map key tables to a method, set SLAs for insertion and query latency, and monitor blocks, chains, and space. Small, regular maintenance keeps your database fast and predictable.