Have you ever wondered how a SQL Server brings data back after a crash—fast and intact?

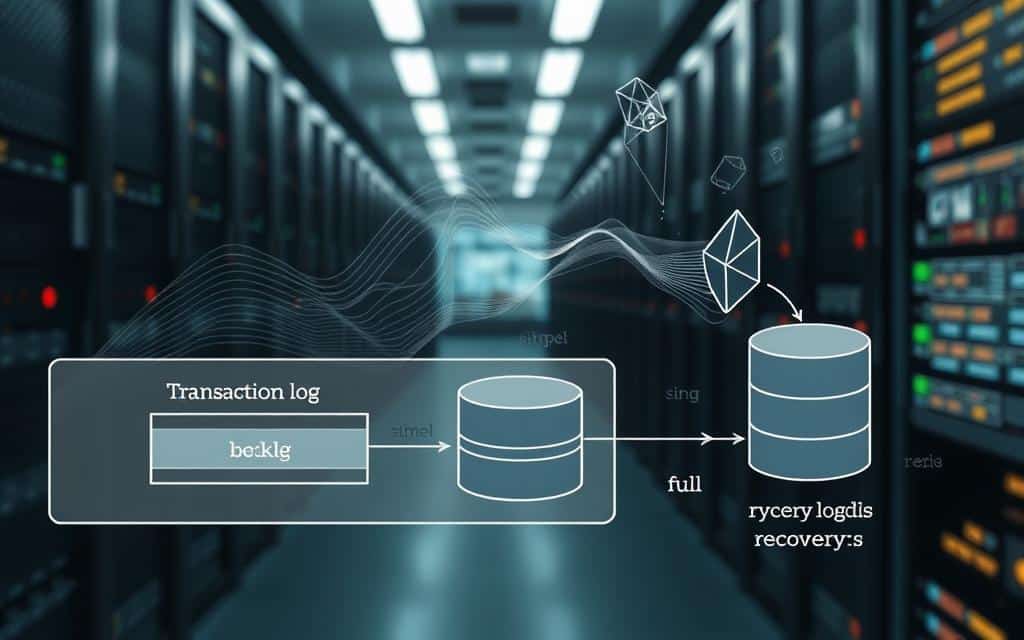

The transaction log in databases is the answer: a sequential record that captures every change so the server can undo or replay work during recovery.

Think of this file as the system’s black box. It stores entries for each operation, helps roll forward committed work, and rolls back incomplete actions after a failure.

Why this matters: without a healthy log file and regular backup routines, you may not meet recovery goals or support high-availability features like mirroring, log shipping, or Always On groups.

This section sets the stage—next you’ll learn how the server writes these records, how truncation reclaims space, and how to manage backups so you never lose critical data.

Why the Transaction Log Matters for Data Integrity and Recovery

How does SQL Server restore precise changes after an outage? A sequential record does the heavy lifting.

Durability and ACID—SQL Server uses write‑ahead logging so a log record is written before its data page hits disk. That guarantees committed changes survive a crash and keeps your data consistent.

Crash recovery uses those records to replay committed operations and undo incomplete ones when the server restarts. If you must reverse a bad change, a ROLLBACK walks the same records to undo work cleanly.

Point‑in‑time recovery depends on applying log backups after a full restore to roll a database forward to the exact time you need. Replication and HA/DR features also rely on continuous shipping and reading of these records so secondaries stay current.

- Roll forward and rollback: recover to a moment or undo mistakes.

- High availability: Always On, mirroring, and shipping use the record stream for near real‑time redo.

- Audit and troubleshooting: the records show what happened and when.

Prioritize backup strategy and monitoring of the file that holds these records—it’s the bridge between your last backup and the moment before failure.

Inside the SQL Server Transaction Log: Architecture and Flow

How does SQL Server organize its write stream so recovery is fast and deterministic?

Logical architecture — The engine records each change as a series of log records. Each record gets a rising LSN (log sequence number), so if LSN2 > LSN1 you know which operation happened later.

Records for the same work are chained with backward pointers. That lets SQL Server roll back a multi-step operation quickly without scanning unrelated entries.

Physical layout and VLFs

Physically, the flow lives inside one or more log files split into Virtual Log Files (VLFs). VLF count is driven by autogrowth rules—different since SQL Server 2014 and stricter in 2022 and Azure SQL.

Too many VLFs slows startup, restore, and high-availability work. If you see MSSQLSERVER_9017 or slow recovery, shrink and regrow the file with sensible FILEGROWTH to fix it.

Log blocks and WAL in action

Each VLF holds log blocks—units aligned to 512-byte sectors and up to ~60 KB. SQL Server uses write-ahead logging (WAL): it writes the record to disk before a modified page is flushed. That guarantees durability even if the disk fails.

- Active range: the segment from the MinLSN to the end cannot be truncated because it’s needed for full recovery.

- Wrap and growth: the file is circular—space is reused after truncation; otherwise it grows or raises error 9002 when full.

Recovery Models and the Transaction Log: Simple, Full, and Bulk-Logged

Do you need point‑in‑time restore or simpler maintenance? The recovery model decides that trade‑off.

Simple recovery model is low maintenance. After a checkpoint, automatic log truncation marks inactive VLFs reusable. That keeps growth in check but removes the ability for point‑in‑time restores because there are no log backups.

Full recovery model gives you the best recovery options. You must run routine log backups to enable point‑in‑time restore. After a checkpoint since the last backup, truncation occurs on the next log backup. Plan backup cadence to match your RPO or the log will grow until it consumes disk space.

Bulk‑logged recovery model is tactical. It lets many bulk operations be minimally logged to reduce write volume. That speeds large imports, SELECT INTO, and index builds but can block precise point‑in‑time recovery if a failure happens during minimal logging. Note: some replication settings force full logging even under bulk‑logged.

- Switching models changes behavior—take a full database backup before the first log backup after moving to full.

- Use bulk‑logged for ETL windows, then return to full and resume regular log backups.

- Map workloads: dev/staging = simple; OLTP = full; warehouse loads = bulk‑logged during loads.

Document model changes and backup times. If you want a checklist for planning recoveries, see our disaster recovery plan.

Plan and Size the Log File for Performance

What size should your server’s log file be to avoid painful recovery delays?

Start by estimating peak write rate and choose an initial log file size that avoids frequent growth during normal operations.

Set initial size and FILEGROWTH to avoid excessive VLFs

Create the log close to its steady-state size. That reduces the number of VLFs created by many small increments.

Set FILEGROWTH to a fixed, meaningful chunk—hundreds of MB or several GB depending on workload—rather than a small percentage.

Best practices to manage VLF count and autogrowth behavior

If you inherit a database with too many VLFs, follow a safe repair path: take a valid full backup, shrink the log to a reasonable minimum, then regrow the file in one step to the target size and set proper autogrowth.

- Watch symptoms: slow startup, long backups/restores, replication or Always On redo lag, or MSSQLSERVER_9017 warnings.

- Storage: place the log on fast, low-latency disk—sequential writes benefit from SSDs.

- Policy: document initial size and growth settings and re-evaluate quarterly as workloads change.

| Action | When to apply | Expected result |

|---|---|---|

| Pre-size log file | Before go-live or large import | Fewer autogrowth events; lower VLF count |

| Set fixed FILEGROWTH (MB) | When configuring file properties | Predictable growth; faster recovery |

| Shrink and regrow (one-step) | When fixing excessive VLFs | Restores healthy VLF layout |

| Move log to SSD | When I/O is a bottleneck | Lower latency for writes and backups |

Monitor Log Space and Keep Truncation Healthy

Are you regularly checking free log space so backups and recovery stay uninterrupted?

Start with visibility: run sys.dm_db_log_space_usage and DBCC SQLPERF(LOGSPACE) to see file size, percent used, and which database is approaching thresholds.

How checkpoints enable reuse

Checkpoints push dirty pages to disk and advance the MinLSN. Under simple recovery that lets the engine reuse inactive VLFs and control growth.

What delays truncation

- Missing a required log backup under full/bulk recovery.

- Active long-running transactions, ongoing backups or restores, or blocked replication and mirroring.

- Availability replica redo, snapshots, log scans, or in‑memory checkpoint work (XTP_CHECKPOINT).

Query sys.databases for log_reuse_wait_desc to learn the exact blocker. Remember: truncation frees space inside the file — it does not shrink the physical file on disk. Use targeted fixes (run a backup, resolve the long transaction, or address replica lag) rather than shrinking blindly.

Pro tip: set alerts at ~70% percent used and automate an on‑demand backup when LOG_BACKUP or ACTIVE_TRANSACTION appears for extended time.

Backups That Protect Your Data and Your Log Space

Which backup cadence will let you meet recovery goals and keep log space healthy?

Start with the model—under the full recovery model, routine log backups are required to enable point‑in‑time recovery and to free space inside the file.

Full, differential, and transaction log backups: the cadence

A common, pragmatic schedule is a weekly full database backup, daily differentials, and frequent log backups every 15–30 minutes.

Adjust frequency based on write volume and your RPO. If you can only lose five minutes of work, run log backup every five minutes and confirm your storage and I/O can handle it.

Point-in-time restores with log backups under full recovery

To restore to a moment, you need a recent full, optionally a differential, and every log backup up to the target time.

Remember: take a full database backup before the first log backup—only then do log backups begin truncating and protecting space.

Align RTO/RPO with backup frequency and retention

Balance RTO and RPO—more frequent differentials shorten restore time by cutting the number of log backups to apply.

- Plan for bulk windows: using bulk‑logged recovery can speed big loads but may block point‑in‑time restores during minimal logging.

- Automate verification: test restores, validate checksums, and track job success to avoid surprises.

- Retention: keep enough full/diff/log backups to meet compliance and operations; tier older backups to lower cost.

Troubleshoot a Full or Growing Server Transaction Log

Facing a growing server transaction log? Start with a quick diagnosis so you avoid downtime and data loss.

Step 1 — identify the blocker. Run sys.databases and check log_reuse_wait_desc to see why truncation paused — common values are LOG_BACKUP, ACTIVE_TRANSACTION, or AVAILABILITY_REPLICA.

Step 2 — free space safely. If the sql server database uses the full recovery model, take an immediate log backup. That often allows truncation once a checkpoint has advanced.

Practical fixes

- If disk is full, add disk space or move the log file to a larger volume and set sensible FILEGROWTH so single growth covers surges.

- Find long-running transactions via DMVs—ask owners to commit or break large operations into smaller batches.

- Check replication and mirroring agents; resolve network or agent failures so secondaries consume records and allow truncation.

- Avoid shrinking as a first step — it does not fix root causes and can create many VLFs; fix the blocker, then if needed shrink and regrow in one step.

| Action | When to apply | Expected result |

|---|---|---|

| Query log_reuse_wait_desc | First response | Identify exact truncation blocker |

| Immediate log backup | Full or bulk‑logged mode, disk low | Truncation clears space if checkpointed |

| Increase file or move to larger disk | Insufficient disk space or growth disabled | Prevents error 9002 and avoids emergency shrink |

| Resolve long txn or agent lag | ACTIVE_TRANSACTION or AVAILABILITY_REPLICA shown | Allows normal truncation and recovery |

After recovery: document the root cause, tune backup cadence and FILEGROWTH, and right‑size the log file to avoid repeats.

High Availability, Replication, and the Transaction Log

Why does every HA and replication feature lean on the same sequential record to stay current?

The write stream is the heart of Always On and shipping solutions. In Always On availability groups, every committed change becomes a log record that the primary sends to secondaries. Those replicas continuously apply the incoming records (redo) so the secondary database stays closely aligned with the primary.

Log shipping takes a different path: the primary takes frequent log backups, copies them to a standby, and restores on a schedule. Your recovery point objective equals how often those backups run.

How replication reads and why health matters

Transactional replication uses the Log Reader Agent to scan the write stream for marked changes and push them into the distribution database. If the agent stalls, truncation cannot proceed and the file will grow.

- Monitor redo queues: network issues, slow I/O, or too many VLFs cause lag and drift from your RPO/RTO.

- Keep autogrowth sensible: sized growth steps reduce excessive VLFs and speed replay during recovery.

- Test failovers: validate that backups, shipping, and replication workflows coexist without starving redo.

| Feature | How it uses the write stream | Operational tip |

|---|---|---|

| Always On | Ships each committed record for continuous redo | Monitor redo latency and I/O for secondaries |

| Log shipping | Copies and restores periodic log backups | Keep copy/restore jobs tight and monitor lag |

| Transactional replication | Log Reader Agent reads and forwards marked changes | Watch agent health; stalled agent blocks truncation |

Putting It All Together for Reliable, Efficient Operations Today

Want a simple checklist that prevents most space and recovery headaches? Start by picking the right recovery model for each environment—simple for noncritical, full for production OLTP, and bulk‑logged for large, planned loads.

Right‑size the log file and set fixed FILEGROWTH so you avoid excessive VLFs. Monitor percent used, VLF counts, and log_reuse_wait_desc and alert before issues become incidents.

Lock in a backup plan: routine full and differential backups plus frequent log backups that meet your RPO, and verify restore tests regularly. Let WAL and checkpoints do their job—truncation follows backups, not shrinking.

When problems appear, fix the root cause (long transactions, stalled replication, slow redo), then adjust capacity and cadence. Document runbooks and review settings quarterly so your server and data stay resilient and recoverable.