Your choice of a data schema isn’t just a technical checkbox—it’s a decision that echoes through your entire organization. It directly impacts how fast your dashboards load and whether your team can quickly answer critical business questions.

Two primary models dominate analytical database design: the star schema and the snowflake schema. At first glance, they appear similar, both built around a central fact table. But their internal structures differ dramatically.

These differences aren’t just academic. They affect real-world outcomes like query performance, storage costs, and maintenance complexity. A poor choice can lead to slow reports, bloated storage bills, and analysts waiting hours for simple answers.

We’ll break down both approaches with clear examples and practical tradeoffs. You’ll see how each structure behaves and learn to select the right one for your specific data warehouse needs. This knowledge turns a complex decision into a confident, strategic move for your business.

Understanding the Data Warehouse Landscape

How you model your data warehouse directly impacts the speed and accuracy of every business decision made from it. This foundational choice determines whether your team spends minutes or hours answering critical questions.

Role of Data Models in Analytics

Your data models serve as the blueprint for your entire analytics ecosystem. They’re not just technical diagrams—they’re the difference between rapid insights and frustrating delays.

Well-designed structures let business users self-serve their information needs. Poor designs create bottlenecks where every question requires engineering intervention.

The relationships you establish between tables determine everything downstream. This includes query performance, maintenance overhead, and scalability as your data volume grows.

| Aspect | Speed-Optimized Approach | Efficiency-Focused Approach |
|---|---|---|
| Query Performance | Fast, simple joins | More complex relationships |
| Storage Complexity | Higher redundancy | Normalized structure |
| Business User Access | Easy self-service | Steeper learning curve |

Setting the Stage for Schema Design

Organizations consistently face a fundamental tradeoff in their database architecture. Do you prioritize query speed and simplicity, or storage efficiency and data integrity?

Understanding these core differences helps you make informed decisions before building pipelines. You’ll avoid investing months on the wrong foundation for your warehouse.

The right modeling approach scales gracefully with your business needs. It ensures your analytics platform grows alongside your organization’s data requirements.

Fundamentals of Star Schema Design

Business intelligence thrives when data structures align with how people actually think about metrics. The star approach delivers immediate value through its intuitive table relationships.

Central Fact Tables and Denormalized Dimensions

Your central fact table contains the measurable events your business tracks. Think of sales transactions, website clicks, or inventory movements.

Surrounding dimensions provide the “who, what, when, where” context. A product dimension might include name, category, and brand in one flat table.

This denormalization strategy deliberately stores redundant information. It eliminates complex joins that slow down queries.

| Design Approach | Join Complexity | Storage Efficiency | Query Speed |
|---|---|---|---|
| Denormalized | Simple, direct joins | Higher redundancy | Fast performance |
| Normalized | Multiple table joins | Minimal redundancy | Slower queries |

For a retail example, your sales fact table connects to product, customer, and date dimensions. Each contains all relevant attributes in single tables.
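The retail layout described above can be sketched in a few lines. This is a minimal illustration using Python's built-in `sqlite3` module as a stand-in for a real warehouse; the table names (`fact_sales`, `dim_product`, `dim_customer`, `dim_date`), the columns, and the sample rows are all assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Denormalized dimensions: every attribute lives in one flat table.
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT,
    category    TEXT,
    brand       TEXT
);
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY,
    name         TEXT,
    country      TEXT
);
CREATE TABLE dim_date (
    date_key  INTEGER PRIMARY KEY,
    full_date TEXT,
    year      INTEGER,
    month     INTEGER
);
-- Fact table: one row per sale, with a foreign key to each dimension.
CREATE TABLE fact_sales (
    sale_id      INTEGER PRIMARY KEY,
    product_key  INTEGER REFERENCES dim_product(product_key),
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    date_key     INTEGER REFERENCES dim_date(date_key),
    quantity     INTEGER,
    revenue      REAL
);
""")

# Hypothetical sample data.
conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?, ?)", [
    (1, "Laptop", "Electronics", "Acme"),
    (2, "Headphones", "Electronics", "Acme"),
    (3, "Desk", "Furniture", "Oakline"),
])
conn.execute("INSERT INTO dim_customer VALUES (1, 'Ada', 'United States')")
conn.execute("INSERT INTO dim_date VALUES (20240115, '2024-01-15', 2024, 1)")
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?, ?)", [
    (1, 1, 1, 20240115, 1, 1200.0),
    (2, 2, 1, 20240115, 2, 300.0),
    (3, 3, 1, 20240115, 1, 450.0),
])

# Revenue by category needs a single join: fact -> product dimension.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)
```

Note that `category` and `brand` are plain text columns on `dim_product`, so the values repeat on every product row. That repetition is the redundancy the star design accepts in exchange for the single join.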

Simplifying Business Reporting

Analysts write simpler SQL with this flat structure. Every attribute they need sits exactly one join away from the central fact table.

BI tools aggregate millions of transactions quickly. They perform straightforward joins between facts and their denormalized dimensions.

This approach excels at business reporting because it mirrors how people naturally analyze data. You can learn more about the tradeoffs in our guide on database normalization vs denormalization.

Exploring the Snowflake Schema Architecture

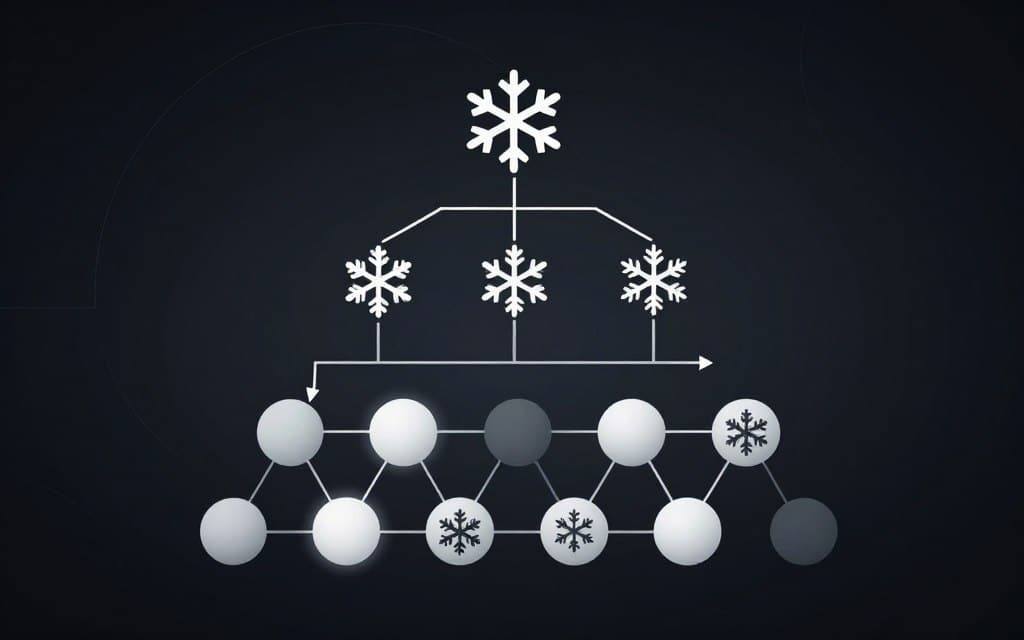

Imagine a dimension table that branches out like a crystal. This is the visual essence of the snowflake model’s normalized structure.

It uses the same central fact table as the star approach. The key difference lies in how it handles the surrounding dimensions.

Normalized Tables and Hierarchical Relationships

Here, dimension tables are broken down to eliminate redundancy. This process, called normalization, creates a web of hierarchical relationships.

Take a product dimension. Instead of one flat table, you might have separate tables for Product, Sub-category, Category, and Department.

Your sales fact table still holds the core transaction data. But it now connects to this refined, interconnected set of tables.

This design means “United States” appears just once in a Country table. It doesn’t repeat across millions of customer records.

The result is significant storage savings for large datasets. Updating a category name becomes a simple, single-row change.

The tradeoff? Your queries require more joins to gather all the necessary information from across the normalized hierarchy.
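To make the country example concrete, here is a small sketch of a snowflaked customer dimension, again using Python's `sqlite3` as a stand-in for a warehouse. The table names (`dim_country`, `dim_customer`, `fact_sales`) and the rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- The country name lives in its own table and is referenced by key.
CREATE TABLE dim_country (
    country_key INTEGER PRIMARY KEY,
    name        TEXT UNIQUE
);
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY,
    name         TEXT,
    country_key  INTEGER REFERENCES dim_country(country_key)
);
CREATE TABLE fact_sales (
    sale_id      INTEGER PRIMARY KEY,
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    revenue      REAL
);
""")

# Hypothetical sample data.
conn.executemany("INSERT INTO dim_country VALUES (?, ?)", [
    (1, "United States"),
    (2, "Canada"),
])
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)", [
    (1, "Ada", 1),
    (2, "Grace", 1),
    (3, "Linus", 2),
])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", [
    (1, 1, 100.0),
    (2, 2, 250.0),
    (3, 3, 75.0),
])

# The country name is stored once, however many customers reference it.
us_rows = conn.execute(
    "SELECT COUNT(*) FROM dim_country WHERE name = 'United States'"
).fetchone()[0]

# Revenue by country walks two joins: fact -> customer -> country.
revenue_by_country = conn.execute("""
    SELECT co.name, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_customer AS cu ON cu.customer_key = f.customer_key
    JOIN dim_country  AS co ON co.country_key = cu.country_key
    GROUP BY co.name
    ORDER BY co.name
""").fetchall()
print(us_rows, revenue_by_country)
```

Each extra level in the hierarchy (region, city, and so on) would add one more table and one more join to the same query.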

Deep Dive into Star Schema vs Snowflake Schema: Performance, Storage, and Update Tradeoffs

Every second counts when your analysts are waiting for reports to load—your schema design determines those seconds. The core differences between these approaches create real tradeoffs that affect your entire analytics operation.

Query Speed and Join Complexity

The star schema's flat structure generally delivers faster queries. Analysts write simpler SQL with direct joins to single-level dimensions.

In contrast, the snowflake schema requires a chain of joins through its normalized dimension tables. This increases join complexity and can slow down queries over large datasets.

Balancing Redundancy with Data Integrity

Data duplication is the main consideration for storage efficiency. A star schema repeats attribute values, such as category or country names, across many dimension rows.

A snowflake schema stores each unique value just once. This reduces storage requirements but means more tables and more joins.

Updates reveal another key difference. Renaming a category means rewriting every dimension row that carries it in a star schema, versus a single row in a snowflake schema.
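The update difference is easy to measure on a toy dataset. The sketch below, using Python's `sqlite3`, loads the same 1,000 hypothetical products into a flat table (`star_product`) and a normalized pair (`snow_product`, `snow_category`), then counts how many rows a category rename touches in each. All names and row counts are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Flat (star-style) dimension: the category name is repeated per product.
CREATE TABLE star_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT,
    category    TEXT
);
-- Normalized (snowflake-style) dimension: products point at a category row.
CREATE TABLE snow_category (
    category_key INTEGER PRIMARY KEY,
    name         TEXT
);
CREATE TABLE snow_product (
    product_key  INTEGER PRIMARY KEY,
    name         TEXT,
    category_key INTEGER REFERENCES snow_category(category_key)
);
""")

conn.execute("INSERT INTO snow_category VALUES (1, 'Electronics')")
products = [(key, f"Product {key}") for key in range(1, 1001)]
conn.executemany(
    "INSERT INTO star_product VALUES (?, ?, 'Electronics')", products
)
conn.executemany("INSERT INTO snow_product VALUES (?, ?, 1)", products)

# Rename the category in both designs and record the rows modified.
star_rows_touched = conn.execute(
    "UPDATE star_product SET category = 'Consumer Electronics' "
    "WHERE category = 'Electronics'"
).rowcount
snow_rows_touched = conn.execute(
    "UPDATE snow_category SET name = 'Consumer Electronics' "
    "WHERE name = 'Electronics'"
).rowcount
print(star_rows_touched, snow_rows_touched)
```

In the flat table the rename touches one row per product in the category. In the normalized design it touches one row regardless of how many products exist.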

| Design Factor | Flat Structure | Normalized Approach |
|---|---|---|
| Query Performance | Faster execution | More joins required |
| Storage Efficiency | Higher redundancy | Optimized space usage |
| Data Updates | Complex consistency | Single-point changes |
| Implementation Speed | Quick setup | Detailed modeling |
| Maintenance Overhead | Growing complexity | Stable management |
| Debugging Clarity | Obscured relationships | Clear data lineage |

Performance and Query Optimization in Data Warehouses

The difference between a dashboard that loads in seconds versus minutes often comes down to how your queries navigate table relationships. Your data warehouse’s performance directly impacts how quickly your team gets answers.

Modern platforms have narrowed traditional gaps, but fundamental design choices still matter. Let’s examine how different structures handle real analytical workloads.

Real-World Examples of Fast Queries

Imagine analyzing sales revenue by product department. A flat structure connects your sales fact table directly to a comprehensive product dimension table.

This approach requires just one join operation. Your query executes rapidly, even across millions of transaction records.

Contrast this with a normalized approach. The same analysis might need four or five joins through multiple dimension tables.

Each additional join adds work for the query engine. On large tables without suitable indexing, the slowdown becomes noticeable.
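The two query shapes can be placed side by side. This sketch uses Python's `sqlite3` with hypothetical tables: a flat `star_product` dimension, and a snowflaked chain of `snow_product`, `snow_subcategory`, `snow_category`, and `snow_department`. It only demonstrates that both queries return the same answer with a different number of joins; it makes no claim about timing on a real warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star: one flat product dimension.
CREATE TABLE star_product (
    product_key INTEGER PRIMARY KEY, name TEXT, department TEXT
);
-- Snowflake: the same hierarchy split into four tables.
CREATE TABLE snow_department (
    department_key INTEGER PRIMARY KEY, name TEXT
);
CREATE TABLE snow_category (
    category_key INTEGER PRIMARY KEY, name TEXT,
    department_key INTEGER REFERENCES snow_department(department_key)
);
CREATE TABLE snow_subcategory (
    subcategory_key INTEGER PRIMARY KEY, name TEXT,
    category_key INTEGER REFERENCES snow_category(category_key)
);
CREATE TABLE snow_product (
    product_key INTEGER PRIMARY KEY, name TEXT,
    subcategory_key INTEGER REFERENCES snow_subcategory(subcategory_key)
);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY, product_key INTEGER, revenue REAL
);

-- Hypothetical sample data, identical in both designs.
INSERT INTO snow_department  VALUES (1, 'Technology'), (2, 'Home');
INSERT INTO snow_category    VALUES (1, 'Computers', 1), (2, 'Furniture', 2);
INSERT INTO snow_subcategory VALUES (1, 'Laptops', 1), (2, 'Desks', 2);
INSERT INTO snow_product     VALUES (1, 'Laptop 14', 1), (2, 'Oak Desk', 2);
INSERT INTO star_product     VALUES (1, 'Laptop 14', 'Technology'),
                                    (2, 'Oak Desk', 'Home');
INSERT INTO fact_sales       VALUES (1, 1, 1200.0), (2, 1, 800.0),
                                    (3, 2, 450.0);
""")

# Star: one join from the fact table to the flat dimension.
STAR_SQL = """
    SELECT p.department, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN star_product AS p ON p.product_key = f.product_key
    GROUP BY p.department
    ORDER BY p.department
"""

# Snowflake: four joins to walk product -> sub-category -> category -> department.
SNOWFLAKE_SQL = """
    SELECT d.name, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN snow_product     AS p ON p.product_key = f.product_key
    JOIN snow_subcategory AS s ON s.subcategory_key = p.subcategory_key
    JOIN snow_category    AS c ON c.category_key = s.category_key
    JOIN snow_department  AS d ON d.department_key = c.department_key
    GROUP BY d.name
    ORDER BY d.name
"""

star_result = conn.execute(STAR_SQL).fetchall()
snowflake_result = conn.execute(SNOWFLAKE_SQL).fetchall()
print(star_result == snowflake_result, star_result)
```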

Cloud data warehouses change this equation dramatically. Platforms like BigQuery use columnar storage and intelligent query planning.

They optimize multi-join operations far more efficiently than legacy systems. Materialized views can pre-compute common joins, effectively mimicking flat structure performance.

Your actual query speed depends on multiple factors beyond table design. Data volume, indexing strategy, and compute resources all play critical roles.

The right balance ensures your team gets fast answers without compromising data integrity.

Storage Efficiency and Maintenance Challenges

Monthly cloud storage bills reveal the true cost of data redundancy that different architectures create. What starts as minor space differences can grow into significant expenses over time.

You’ll face ongoing maintenance challenges regardless of which approach you choose. The key lies in understanding how each structure handles these operational realities.

Comparing Storage Overhead in Different Schemas

Redundancy becomes your biggest storage concern with denormalized designs. Every repeated value across millions of records adds up quickly.

Consider a product dimension table with 50,000 items. Department names repeat for each product instead of storing them once.

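This duplication adds overhead. On a dimension of this size the absolute cost is small, but the same pattern repeated across many attributes and across much larger dimensions, such as customers, can add noticeably to your storage bill.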

Normalized approaches dramatically reduce this waste. They maintain each unique piece of information in single locations.
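A back-of-the-envelope estimate helps put the redundancy in perspective. The figures below are assumptions chosen only for illustration (20 departments, a 16-byte average department name, 4-byte integer keys), and the calculation ignores compression, which columnar warehouses typically apply to repeated values and which can shrink the gap considerably.

```python
def flat_bytes(rows: int, name_bytes: int) -> int:
    """Bytes used when the name is repeated on every dimension row."""
    return rows * name_bytes


def normalized_bytes(rows: int, distinct_values: int,
                     name_bytes: int, key_bytes: int) -> int:
    """Bytes used when rows hold a key and names live in a lookup table."""
    keys_on_rows = rows * key_bytes
    lookup_table = distinct_values * (key_bytes + name_bytes)
    return keys_on_rows + lookup_table


PRODUCTS = 50_000   # from the example in the text
DEPARTMENTS = 20    # assumed
NAME_BYTES = 16     # assumed average department name length
KEY_BYTES = 4       # assumed integer surrogate key

flat = flat_bytes(PRODUCTS, NAME_BYTES)
normalized = normalized_bytes(PRODUCTS, DEPARTMENTS, NAME_BYTES, KEY_BYTES)
savings = flat - normalized

print(f"flat: {flat:,} B, normalized: {normalized:,} B, saved: {savings:,} B")
```

Under these assumptions the normalized layout uses roughly a quarter of the space for this one attribute. The absolute number only becomes significant when multiplied across many attributes and much larger row counts.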

Maintenance follows the same pattern. Updating a repeated value means rewriting every row that carries it in a star schema, versus a single record in a snowflake schema.

Data consistency suffers when the same value is repeated across many rows. A misspelling or variant splits what should be one group into several, which skews your reports and analytics.
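The effect of an inconsistent value on a report can be shown with a tiny example. In this sketch (Python `sqlite3`, hypothetical tables and data), one product row in a flat dimension carries a misspelled department name, and the grouped revenue splits into two lines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY, name TEXT, department TEXT
);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY, product_key INTEGER, revenue REAL
);
-- The third row has a deliberate typo in the department name.
INSERT INTO dim_product VALUES
    (1, 'Laptop', 'Electronics'),
    (2, 'Headphones', 'Electronics'),
    (3, 'Monitor', 'Electroncs');
INSERT INTO fact_sales VALUES (1, 1, 1000.0), (2, 2, 200.0), (3, 3, 300.0);
""")

# Grouping by the free-text column treats the typo as a separate department.
revenue_by_department = dict(conn.execute("""
    SELECT p.department, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.department
"""))
print(revenue_by_department)
```

With a normalized department table, products would reference a key instead of retyping the name, so this kind of split could not arise from a per-row typo.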

The storage advantage compounds as your warehouse grows. Small differences become massive over years of data accumulation.

Schema Design in the Modern Cloud Data Era

Cloud platforms have rewritten the rulebook for analytical database architecture. They give you unprecedented flexibility in your modeling decisions.

The rigid tradeoffs that once defined warehouse design are now blurred. Cloud-native innovations handle complexity behind the scenes.

Utilizing Materialized Views and Columnar Storage

Materialized views let you maintain normalized structures while achieving star-like query speeds. They pre-compute complex joins and aggregations automatically.
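The idea can be sketched locally, with one important caveat: SQLite has no materialized views, so the example below emulates one by rebuilding a plain table from the normalized sources. The `refresh_category_revenue` helper and the `mv_category_revenue` table are hypothetical names. Real warehouses expose their own materialized view syntax and refresh behavior, which this sketch does not attempt to reproduce.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized source tables.
CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY, name TEXT,
    category_key INTEGER REFERENCES dim_category(category_key)
);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY, product_key INTEGER, revenue REAL
);
INSERT INTO dim_category VALUES (1, 'Electronics'), (2, 'Furniture');
INSERT INTO dim_product VALUES (1, 'Laptop', 1), (2, 'Desk', 2);
INSERT INTO fact_sales VALUES (1, 1, 1200.0), (2, 2, 450.0);
""")


def refresh_category_revenue(connection: sqlite3.Connection) -> None:
    """Rebuild the precomputed table from the normalized source tables."""
    connection.executescript("""
        DROP TABLE IF EXISTS mv_category_revenue;
        CREATE TABLE mv_category_revenue AS
        SELECT c.name AS category, SUM(f.revenue) AS revenue
        FROM fact_sales AS f
        JOIN dim_product  AS p ON p.product_key = f.product_key
        JOIN dim_category AS c ON c.category_key = p.category_key
        GROUP BY c.name;
    """)


def read_category_revenue(connection: sqlite3.Connection) -> dict:
    """Dashboards read the flat precomputed table; no joins at query time."""
    return dict(connection.execute(
        "SELECT category, revenue FROM mv_category_revenue"
    ))


refresh_category_revenue(conn)
before = read_category_revenue(conn)

# New facts arrive; the precomputed table is stale until refreshed.
conn.execute("INSERT INTO fact_sales VALUES (3, 1, 300.0)")
stale = read_category_revenue(conn)

refresh_category_revenue(conn)
after = read_category_revenue(conn)
print(before, stale, after)
```

The stale read in the middle is the design cost to keep in mind: precomputed results are only as fresh as their last refresh, whether that refresh is manual, as here, or managed by the platform.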

Columnar storage transforms how your warehouse accesses information. It fetches only the specific fields each query needs.

This approach dramatically reduces I/O overhead. Your system scans data much faster regardless of your underlying design.

Cloud Services and Auto-scaling Compute

Auto-scaling compute eliminates rigid resource constraints. Platforms dynamically allocate nodes for complex workloads.

Query optimizers automatically rewrite inefficient SQL. They reorder joins and push predicates down to minimize data movement.

Caching layers store results from recent queries. Repeated analyses return instantly without expensive re-execution.

| Cloud Feature | Performance Impact | Maintenance Benefit |
|---|---|---|
| Materialized Views | Near-instant complex queries | Automated join optimization |
| Columnar Storage | Reduced data scanning | Efficient resource usage |
| Auto-scaling Compute | Dynamic resource allocation | Cost-effective performance |
| Intelligent Caching | Instant repeated queries | Reduced processing load |

You can now design structures that prioritize data integrity. Cloud features preserve the responsiveness business users demand.

When to Choose Star Schema for Simplicity and Speed

Some data warehouse decisions come down to a simple choice: speed versus complexity. The flat structure delivers immediate performance advantages that transform user experience.

Ideal Use Cases for a Flat Structure

Choose this approach when dashboard responsiveness matters most. Business leaders expect sub-second query times on sales dashboards with multiple filters. The simplified join structure delivers that speed consistently.

Your data structure’s stability also determines whether this makes sense. If product categories and customer segments rarely change, the maintenance burden stays manageable.

Business user accessibility strongly favors this design. Analysts without deep SQL expertise can write queries more easily. They don’t need to navigate through multiple normalized table relationships.

Traditional BI tools like Tableau and Power BI perform optimally against flat dimension tables. Their drag-and-drop interfaces map naturally to this structure.

E-commerce operations monitoring daily sales represent an ideal scenario. The sales fact table joins to denormalized product and customer dimensions.

Small to medium data volumes make this particularly attractive. Storage redundancy costs remain negligible compared to performance benefits.

High-frequency reporting scenarios benefit most from this design. If your organization runs the same reports hundreds of times daily, optimizing for read speed delivers better ROI.

Leveraging Snowflake Schema for Scalability and Data Integrity

Large enterprises face a critical challenge: maintaining consistency across millions of records while accommodating constant change. The hierarchical approach delivers precisely this capability when your data complexity demands it.

Maintaining Consistency in Evolving Datasets

Your normalized structure prevents data duplication across massive dimension tables. This becomes crucial when tracking millions of customers across complex geographic hierarchies.

Each unique value appears just once in the entire system. Storage costs drop significantly compared to redundant approaches.

Updates transform from massive operations into simple single-row changes. Restructuring product categories or sales territories becomes manageable rather than overwhelming.

Multinational retailers exemplify the ideal use case. They connect sales data to normalized product, category, and department tables.

This approach mirrors real-world business hierarchies accurately. Your ETL pipelines simplify when updating discrete normalized tables.

Choose this method when your organization naturally operates through layered structures. The scalability advantages compound as your business grows into new markets.

Final Thoughts on Modeling Your Data Warehouse

The real power of your data warehouse emerges when you stop treating schema selection as a binary choice. Most teams successfully blend both approaches based on actual usage patterns.

Keep frequently queried dimensions flat for speed—think time, product, and customer tables. Normalize complex hierarchies like locations or employee structures for easier maintenance.

Modern cloud platforms make hybrid modeling practical through materialized views and intelligent optimization. Your business users get fast performance without seeing the underlying complexity.

Start with what fits your immediate needs, then evolve as you identify bottlenecks. The goal isn’t architectural purity—it’s building a maintainable system that delivers fast, accurate insights as your organization grows.