Your analytical queries crawl when they should sprint—users wait impatiently while your system struggles with basic requests. This frustrating slowdown often traces back to foundational choices made during the initial planning phase.

When built correctly, OLAP cubes deliver fast insights from massive datasets. These multidimensional structures turn complex business intelligence questions into immediate answers. But poor architectural decisions create bottlenecks that ripple through your entire analytical workflow.

The difference between a high-performance system and a sluggish one comes down to strategic planning and execution. We’ll show you how to avoid the common pitfalls that sabotage query speed and storage efficiency.

You’ll discover why schema selection impacts everything from data retrieval to maintenance overhead. Learn how proper aggregation techniques can cut processing time dramatically. We’ll reveal which indexing approaches actually deliver results at enterprise scale.

This isn’t theoretical advice—these are battle-tested methods that handle millions of records without breaking stride. Whether you’re building from scratch or optimizing an existing implementation, these strategies protect your organization’s ability to make timely, data-driven decisions.

Understanding the OLAP Cube Landscape for Business Intelligence

While your operational systems efficiently record every transaction, they weren’t built to answer the complex analytical questions that drive strategic decisions. This creates a fundamental gap between data collection and meaningful insight extraction.

The Role of OLAP in Data Analysis

Online Analytical Processing specializes in transforming raw information into actionable intelligence. Unlike transactional systems that handle day-to-day operations, this approach delivers answers across millions of records in seconds.

Think of your sales database recording individual transactions versus an analytical system revealing regional performance trends. The difference lies in pre-organized data structures that anticipate business questions. This multidimensional approach to data analysis enables rapid slicing and drilling without complex query execution.

Key BI and Data Warehousing Concepts

The data warehouse serves as the foundation, consolidating information from multiple sources into a unified view. This centralized repository eliminates the limitations of scattered databases and provides consistent business intelligence across your organization.

Understanding the distinction between different database types is crucial for effective implementation. For a deeper dive into how these systems differ, explore our guide on OLAP vs OLTP databases and their respective strengths.

When properly implemented, this analytical landscape transforms how your team accesses and interprets critical business information. The result is faster decision-making and more accurate strategic planning.

OLAP Cube Design Best Practices: Essential Concepts

Building a high-performance analytical system requires mastering three core components that form its foundation. Think of your structure as resting on three pillars: dimensions that organize information logically, measures that quantify business outcomes, and relationships that connect everything intelligently.

Dimensions aren’t just database tables—they’re the lenses through which users explore information. Each dimension contains attributes that map directly to business concepts like customer regions or time periods. These attributes determine how effectively users can filter and group results.

Measures represent the numbers that drive decisions: revenue, quantity sold, customer lifetime value. But you can’t afford to include every possible metric. Each addition increases complexity and impacts system performance.

Start with the measures you know users need immediately. Add further measures and attributes as requirements evolve. This focused approach beats the “include everything” strategy that bogs down systems.

The relationships between dimensions matter just as much as the dimensions themselves. Properly defining how Year relates to Quarter, or Customer relates to Region, ensures query accuracy and speed.

Get these fundamentals wrong, and you’ll face months of troubleshooting confusing results and slow queries. Master them now, and advanced optimizations fall into place naturally.

Advanced Data Modeling and Schema Strategies

The architecture you select for your multidimensional data model determines whether your analytics deliver instant insights or frustrating delays. Your choice between foundational approaches directly impacts query speed and maintenance overhead.

This decision isn’t just about storage—it’s about creating a structure that anticipates how users will explore information.

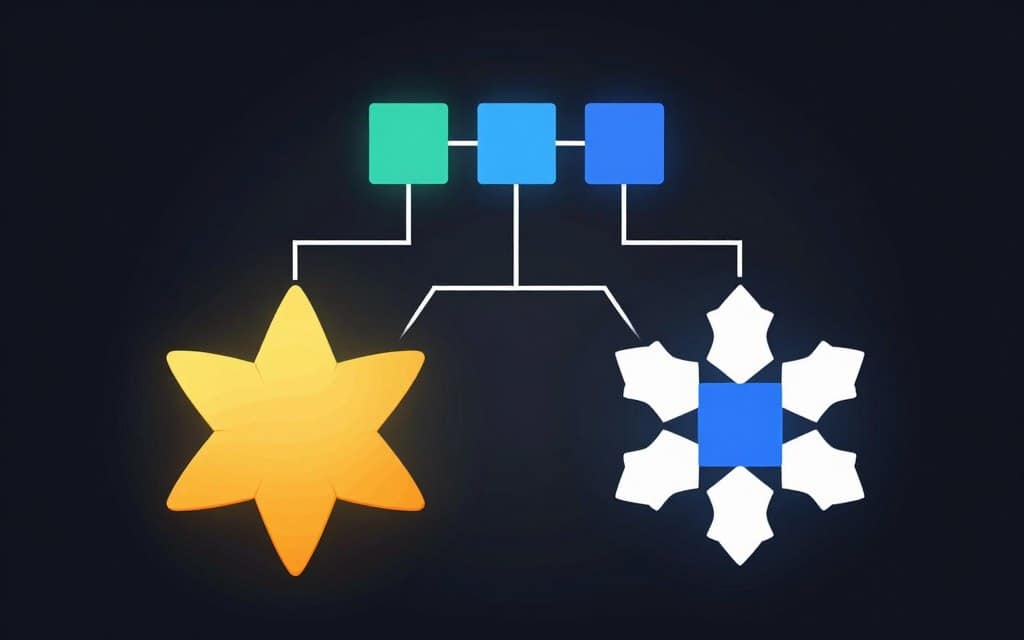

Star Schema Versus Snowflake Schema

Think of the star approach as a hub-and-spoke system. A central fact table connects directly to all dimension tables. This creates one join per dimension for faster queries and simpler maintenance.

The snowflake method normalizes those dimension tables further. It breaks them into sub-dimensions to save storage space. But you pay the price in query complexity with more join operations.

| Feature | Star Schema | Snowflake Schema |
|---|---|---|
| Query Performance | Faster due to fewer joins | Slower due to complex joins |
| Storage Efficiency | Higher redundancy | Better normalization |
| Maintenance Complexity | Simpler structure | More complex relationships |
| Ideal Use Case | Performance-critical analytics | Storage-constrained environments |

In most analytical scenarios, the star approach wins. Query speed matters more than the storage savings from normalization.
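
To make the hub-and-spoke idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table and column names (`fact_sales`, `dim_date`, `dim_region`) and the sample rows are assumptions made up for illustration, not part of any particular product.

```python
import sqlite3

# In-memory database; every table and column name here is hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_date   (date_key INTEGER PRIMARY KEY, year INTEGER, quarter TEXT);
    CREATE TABLE dim_region (region_key INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE fact_sales (
        date_key   INTEGER REFERENCES dim_date(date_key),
        region_key INTEGER REFERENCES dim_region(region_key),
        revenue    REAL
    );
    INSERT INTO dim_date   VALUES (1, 2024, 'Q1'), (2, 2024, 'Q2');
    INSERT INTO dim_region VALUES (1, 'West'), (2, 'East');
    INSERT INTO fact_sales VALUES (1, 1, 100.0), (1, 2, 50.0), (2, 1, 75.0), (2, 1, 25.0);
""")

# One join per dimension: the fact table is the hub, each dimension is a spoke.
rows = conn.execute("""
    SELECT d.quarter, r.region, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_date   d ON d.date_key   = f.date_key
    JOIN dim_region r ON r.region_key = f.region_key
    GROUP BY d.quarter, r.region
    ORDER BY d.quarter, r.region
""").fetchall()
print(rows)
```

A snowflake version of the same model would split `dim_region` into further tables (for example region and country), adding one more join to this query for each extra level.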

Optimizing Dimensions and Measures

Every additional attribute increases storage needs and processing time. Include only essential dimensions and measures to keep your model lightweight.

Do you need a customer’s street address for sales analysis? Probably not. Focus on Customer ID, Region, and Segment instead.

Aggregate data to the coarsest grain your users actually query. Pre-aggregating daily sales to weekly rollups, for example, can turn a 10-second query into a sub-second response, because each query reads roughly one-seventh as many rows.

This strategic simplification protects your system’s performance and your team’s ability to make timely decisions.

Storage and Aggregation Optimization Techniques

Your data’s speed hinges on how you store and pre-calculate information. Without smart optimization, even the most powerful hardware struggles under the weight of billions of records.

Strategic planning here transforms sluggish reports into instant insights. We’ll focus on two powerful methods to achieve this.

Pre-Aggregation Methods for Faster Queries

Why calculate the same totals repeatedly? Pre-aggregation answers your most common questions before anyone even asks. This technique creates summary tables with pre-computed results.

Focus on your top 20 user queries first. Building aggregations for these patterns can satisfy over 80% of requests instantly. Materialized views store these calculations physically.

A query for quarterly revenue by region then retrieves a pre-existing answer. It avoids scanning millions of raw transaction rows.
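
The sketch below illustrates the idea with a plain summary table in SQLite, which has no native materialized views; the summary table stands in for one. The table name `agg_revenue_by_quarter_region` and the sample data are assumptions for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (quarter TEXT, region TEXT, revenue REAL);
    INSERT INTO sales VALUES
        ('2024-Q1', 'West', 120.0), ('2024-Q1', 'West', 80.0),
        ('2024-Q1', 'East', 50.0),  ('2024-Q2', 'West', 40.0);

    -- Summary table built once, for example during scheduled processing.
    CREATE TABLE agg_revenue_by_quarter_region AS
        SELECT quarter, region, SUM(revenue) AS revenue, COUNT(*) AS row_count
        FROM sales
        GROUP BY quarter, region;
""")

def quarterly_revenue(quarter, region):
    """Answer from the summary table instead of scanning raw transaction rows."""
    row = conn.execute(
        "SELECT revenue FROM agg_revenue_by_quarter_region "
        "WHERE quarter = ? AND region = ?",
        (quarter, region),
    ).fetchone()
    return row[0] if row else 0.0

print(quarterly_revenue("2024-Q1", "West"))
```

The trade-off is freshness: the summary reflects the raw table only as of the last rebuild, so it has to be refreshed whenever new transactions are loaded.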

Effective Partitioning Strategies

Partitioning breaks your massive fact table into manageable pieces. You typically divide data by time, like months or years. This organization is a game-changer for performance.

When you query for March sales, the system scans only the March partition and skips the rest. With one year of data split evenly by month, that means ignoring the other eleven partitions, so partition pruning can cut I/O by roughly 12x.

Maintenance also becomes far simpler. You can refresh or back up a single partition without touching historical records.
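
As a rough illustration of partition pruning, the following sketch keeps one in-memory list per month and counts how many rows a monthly query touches. The data is synthetic and evenly distributed, which is the assumption behind the 12x figure; real engines prune at the storage layer rather than in application code.

```python
from collections import defaultdict

# Hypothetical fact rows: (month as "YYYY-MM", amount), 1,000 rows per month.
rows = [(f"2024-{m:02d}", float(m)) for m in range(1, 13) for _ in range(1000)]

# Partition the fact data by month.
partitions = defaultdict(list)
for month, amount in rows:
    partitions[month].append(amount)

def monthly_sales(month):
    """Return (total, rows_scanned), touching only the requested partition."""
    part = partitions.get(month, [])
    return sum(part), len(part)

total, scanned = monthly_sales("2024-03")
print(total, scanned, len(rows))
```

Here the March query reads 1,000 of 12,000 rows. With skewed data, such as a heavy December, the saving for that month would be smaller.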

| Strategy | Primary Benefit | Impact on Query Performance | Maintenance Advantage |
|---|---|---|---|
| Pre-Aggregation | Eliminates on-the-fly calculations | Sub-second response for common queries | Reduces computational load on the server |
| Partitioning | Limits data scanned per query | Dramatically faster filtered searches | Enables targeted data processing and updates |

Combining these techniques creates a robust foundation for high-performance analytics. Your system handles large-scale operations with ease.

Indexing, Compression, and MDX Query Enhancements

The right indexing strategy can transform sluggish data exploration into lightning-fast insights—but choosing wrong costs you precious seconds on every query. These techniques work together to optimize your system’s performance.

Bitmap vs. B-Tree Indexing

Bitmap indexing excels with categorical attributes containing few distinct values. Think gender (Male/Female) or product categories. Each distinct value maps to a bit array with one bit per row, set when that row holds the value, which makes filtering fast.

When you search for “Female customers in the Western region,” bitmap indexes perform blazingly fast Boolean operations. They avoid scanning entire columns of data.

B-tree indexes handle high-cardinality attributes like Customer ID or Transaction ID. They organize millions of unique values hierarchically for efficient lookups.

| Index Type | Best For | Performance Impact | Storage Efficiency |
|---|---|---|---|
| Bitmap Indexing | Low-cardinality attributes (Gender, Status) | Fast filtering and grouping operations | Moderate storage overhead |
| B-Tree Indexing | High-cardinality attributes (Customer ID) | Efficient hierarchical lookups | Higher storage requirements |
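
The bitmap idea can be sketched in a few lines of Python, using arbitrary-precision integers as bit arrays. This is a toy model under assumed sample data, not how any specific engine stores its indexes; the helper names `build_bitmap_index` and `matching_rows` are made up for the example.

```python
def build_bitmap_index(values):
    """Map each distinct value to an int whose bit i is set when row i has that value."""
    index = {}
    for row_id, value in enumerate(values):
        index[value] = index.get(value, 0) | (1 << row_id)
    return index

def matching_rows(bitmap):
    """Decode a bitmap back into a list of row ids."""
    return [i for i in range(bitmap.bit_length()) if (bitmap >> i) & 1]

# Hypothetical customer columns; position i in each list describes the same customer.
gender = ["F", "M", "F", "F", "M"]
region = ["West", "West", "East", "West", "East"]

gender_idx = build_bitmap_index(gender)
region_idx = build_bitmap_index(region)

# "Female customers in the Western region" becomes a single bitwise AND.
hits = matching_rows(gender_idx["F"] & region_idx["West"])
print(hits)
```

The same approach degrades on a column like Customer ID, where nearly every row would need its own bitmap. That is the case the B-tree row in the table covers.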

Smart Compression and MDX Optimization

Columnar compression can reduce storage by 10x or more on repetitive data. With dictionary encoding, a repeated value like “California” is stored once in a dictionary, and each of the millions of rows that contain it holds only a small numeric code.
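
A minimal sketch of dictionary encoding follows. The function names and the sample column are assumptions for illustration; production engines add further steps (bit-packing, run-length encoding) that are not shown here.

```python
def dictionary_encode(column):
    """Replace each string with a small integer code; return (dictionary, codes)."""
    dictionary = []   # code -> original value
    code_of = {}      # original value -> code
    codes = []
    for value in column:
        if value not in code_of:
            code_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(code_of[value])
    return dictionary, codes

def dictionary_decode(dictionary, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [dictionary[c] for c in codes]

states = ["California", "Texas", "California", "California", "Texas"]
dictionary, codes = dictionary_encode(states)
print(dictionary, codes)
```

Each distinct string is stored once, and the column itself shrinks to a list of small integers, so the saving grows with the number of repeats.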

Optimize your MDX queries by removing NON EMPTY clauses that do no useful work. The clause makes the engine test cells for emptiness, which adds overhead when the axis is already dense; keep it where it trims a genuinely sparse result.

Use SCOPE statements to target specific calculations instead of applying complex logic across all hierarchy levels. Restricting a year-over-year growth calculation to the current year, for example, can run as much as 5x faster than applying it to every year.

Derived and Calculated Measures for Efficient Analysis

Imagine a dashboard that instantly displays complex metrics like profit margin, without forcing the server to recalculate them for every viewer. This speed comes from a crucial choice: precomputing your most important measures.

These numeric values are the core of your analysis. Users slice and dice them to find answers. But how you create them impacts performance dramatically.

Precomputing Measures to Reduce Runtime Calculation

Every time a query runs a complex formula, it consumes CPU cycles. This happens across millions of rows of data. The delay adds up quickly for your users.

Precomputation solves this. You calculate the measure once during processing. Then, you store the result as a physical value.

For example, instead of calculating SUM(Quantity * Unit Price) for every “Total Revenue” request, you store the final revenue figure. Queries then retrieve this pre-existing answer instantly.

This strategy shifts the computational burden to scheduled, off-hour processing. It frees up resources during peak usage times.
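
The difference between the two approaches can be sketched with SQLite. The `fact_sales` layout and the `load_rows` helper are hypothetical; the point is only that the multiplication happens once at load time rather than on every query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_sales (quantity INTEGER, unit_price REAL, revenue REAL)"
)

def load_rows(rows):
    """Compute revenue once at load time and store it alongside the inputs."""
    conn.executemany(
        "INSERT INTO fact_sales (quantity, unit_price, revenue) VALUES (?, ?, ?)",
        [(q, p, q * p) for q, p in rows],
    )

load_rows([(2, 10.0), (3, 5.0), (1, 20.0)])

# Runtime calculation: multiplies for every row on every query.
runtime_total = conn.execute(
    "SELECT SUM(quantity * unit_price) FROM fact_sales"
).fetchone()[0]

# Precomputed measure: sums a stored column.
stored_total = conn.execute("SELECT SUM(revenue) FROM fact_sales").fetchone()[0]

print(runtime_total, stored_total)
```

Both queries return the same total. The stored column costs extra space and load-time work in exchange for less computation per query.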

| Measure Type | Calculation Timing | Performance Impact | Best Use Case |
|---|---|---|---|
| Precomputed Measure | During system processing | Extremely fast retrieval | Frequently used metrics (Revenue, Profit) |
| Runtime Calculation | During user query execution | Adds latency, uses server CPU | Ad-hoc, user-specific analysis |

Aim to precompute about 80% of your common measures. Keep the remaining 20% for flexible, on-the-fly analysis. This balance delivers both speed and adaptability.

The trade-off is slightly longer processing times and a bit more storage. This is a minor cost for the significant performance gains you achieve.

Incremental Processing and Data Refresh Best Practices

Your data refresh strategy shouldn’t bring your entire analytical system to a halt every time you add new information. Reprocessing everything is like repainting your entire house because one wall got scuffed—it works, but it’s incredibly inefficient.

Instead, target only what has changed. This incremental processing approach leaves untouched information exactly where it is. You save massive amounts of time and server resources.

Leveraging Change Data Capture (CDC)

Change Data Capture (CDC) is your secret weapon. It tracks modifications—inserts, updates, deletes—at the source database. Your refresh operation reads this log instead of scanning entire tables.

Imagine your system gets 50,000 new transactions daily. CDC identifies exactly those rows. Incremental processing adds them to the correct partition in minutes, not hours.
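
A simplified sketch of applying a change log to partitioned data is shown below. The log format `(operation, id, month, amount)` is an assumption for illustration; real CDC feeds differ by database and expose more metadata.

```python
from collections import defaultdict

# Partitions keyed by month; each maps a transaction id to its amount.
partitions = defaultdict(dict)
partitions["2024-03"] = {1: 100.0, 2: 40.0}

# Hypothetical change log as a CDC feed might expose it.
change_log = [
    ("insert", 3, "2024-04", 60.0),
    ("update", 2, "2024-03", 45.0),
    ("delete", 1, "2024-03", None),
]

def apply_changes(partitions, change_log):
    """Apply only the logged changes and return the set of partitions touched."""
    touched = set()
    for op, txn_id, month, amount in change_log:
        if op == "delete":
            partitions[month].pop(txn_id, None)
        else:  # insert and update both write the latest value
            partitions[month][txn_id] = amount
        touched.add(month)
    return touched

touched = apply_changes(partitions, change_log)
print(sorted(touched))
```

Only the partitions in `touched` need reprocessing afterward; every other month stays exactly as it was.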

Schedule this maintenance during off-peak hours, like 2 AM. This prevents refresh operations from competing with user queries for server power.

If you manage multiple analytical models, stagger their refresh times. Don’t slam the server with simultaneous jobs.

Here’s a critical final step. In SQL Server Analysis Services, updating a dimension can drop aggregations and indexes on the partitions that reference it, so run Process Index afterward to rebuild them. Skip this, and watch query performance crater. Automate the full sequence so that no step depends on someone remembering it.

Ensuring Scalability for Large-Scale Data Operations

Scalability isn’t a future concern—it’s the bedrock of your system’s ability to handle tomorrow’s data demands today. Your current setup might manage 100 million rows, but what happens at a billion? Query times can quintuple without a solid plan.

Parallel processing is your first line of defense. Multi-threading distributes query execution across CPU cores. A 16-core server can scan 16 partitions at once instead of one after another.

Strategic partitioning enables this parallelism. Monthly splits allow 12 concurrent operations for an annual query. This maximizes your hardware’s potential.
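
The shape of a partition-parallel scan can be sketched with Python's standard `concurrent.futures` module. The data is synthetic and the function names are made up. Because of Python's global interpreter lock, threads here show the structure (independent partial aggregates, then a combine step) rather than a real CPU speedup.

```python
from concurrent.futures import ThreadPoolExecutor

# Twelve hypothetical monthly partitions of sales amounts.
partitions = {f"2024-{m:02d}": [float(m)] * 1000 for m in range(1, 13)}

def scan_partition(item):
    """Aggregate one partition independently of the others."""
    month, amounts = item
    return month, sum(amounts)

def annual_total(partitions, workers=4):
    """Scan partitions concurrently, then combine the partial sums."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = dict(pool.map(scan_partition, partitions.items()))
    return sum(partials.values()), partials

total, partials = annual_total(partitions)
print(total)
```

The design relies on the aggregate being decomposable: sums and counts combine cleanly across partitions, while measures such as distinct counts need extra care.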

Cloud services like Azure Analysis Services and Google BigQuery offer elastic scaling. They allocate more power during peak hours and scale back down. You handle seasonal spikes like Black Friday without overprovisioning for average loads.

| Scaling Strategy | Primary Mechanism | Impact on Performance | Ideal Scenario |
|---|---|---|---|
| Horizontal Scaling | Adds more servers to distribute workload | Handles increased user concurrency | Rapid, unpredictable growth in data volume |
| Vertical Scaling | Upgrades existing server CPU/RAM | Boosts speed for complex calculations | Steady growth requiring more powerful hardware |
| Cloud Elasticity | Dynamically allocates compute resources | Manages variable demand cost-effectively | Seasonal peaks or unpredictable query patterns |

Architecture matters at a fundamental level. Dimensions with over 10 million members demand 64-bit systems. A 32-bit process can address at most 4 GB of memory, so it hits a wall well before a dimension of that size fits, crippling performance.

Plan for a minimum of 3x growth. If you process 50GB now, architect for 150GB. Replatforming under pressure is expensive and disruptive. Building for scale from the start protects your investment.

Integrating OLAP Cubes with BI Tools like Excel and SharePoint

The real power of your analytical system emerges when users can explore information without technical barriers. Seamless integration with familiar tools like Microsoft Excel and SharePoint turns complex data into everyday business intelligence.

This connection empowers your team to answer their own questions. They gain immediate access to the insights they need to drive decisions.

Using Self-Service Analytics for User Empowerment

Excel connects directly to your multidimensional structures through its native PivotTable feature. Users simply drag and drop dimensions and measures to slice information. Your marketing team can analyze last quarter’s campaign performance by region in minutes.

They share interactive workbooks with stakeholders. Everyone sees live, refreshed data instead of outdated static reports.

SharePoint serves as the central hub for publishing dashboards. You provide teams with interactive reports that update automatically. This eliminates the chaos of emailing multiple spreadsheet versions.

You maintain strict control over business logic in a single location. Define a key performance indicator like “Days Sales Outstanding” once. Every user sees identical thresholds and color-coding in their tools.

SQL Server Reporting Services (SSRS) pulls from the same source. This allows for pixel-perfect reports for executives alongside the flexible analysis power users crave.

| Tool | Primary Role | Key Advantage | Ideal User |
|---|---|---|---|
| Microsoft Excel | Interactive Analysis & Exploration | Leverages widespread user familiarity with PivotTables | Business analysts, department managers |
| Microsoft SharePoint | Centralized Publishing & Sharing | Provides a secure, managed platform for dashboards | Teams requiring standardized, auto-updating reports |
| SQL Server Reporting Services (SSRS) | Formatted, Production Reporting | Delivers precise, print-ready reports (PDF, etc.) | Executives, finance departments |

This integration strategy shifts analytical power from IT to business users. They find answers instantly instead of waiting for report requests. It’s a practical path to a more agile, data-driven organization.

Optimizing Dimensions, Aggregations, and KPI Strategies

The intelligence of your analytical model hinges on how you define attribute relationships and hierarchies. These structures are not just for organization—they directly enable performance optimizations and ensure accurate data analysis.

Designing Strong Dimension Attributes and Relationships

You must create attribute relationships wherever they exist in your source data. Defining that City rolls up to State, and State to Country, is fundamental. This tells the engine how to optimize storage and processing.
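
Before declaring a relationship such as City to State, it can help to check that the source data actually supports it, meaning each child value maps to exactly one parent. The sketch below does that check on made-up rows; `violates_rollup` is a hypothetical helper, not part of any tool.

```python
def violates_rollup(rows, child, parent):
    """Return child values that map to more than one parent value."""
    parents = {}
    for row in rows:
        parents.setdefault(row[child], set()).add(row[parent])
    return {c: p for c, p in parents.items() if len(p) > 1}

# Hypothetical geography rows.
geo = [
    {"city": "Portland", "state": "Oregon", "country": "USA"},
    {"city": "Portland", "state": "Maine",  "country": "USA"},
    {"city": "Austin",   "state": "Texas",  "country": "USA"},
]

city_conflicts = violates_rollup(geo, "city", "state")
state_conflicts = violates_rollup(geo, "state", "country")
print(city_conflicts, state_conflicts)
```

In this sample, the city name alone does not roll up cleanly because two states contain a Portland. The usual remedy is to key the City attribute on a city identifier or on the city and state together.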

Be ruthless with your attribute selection. If an attribute like “Customer Middle Initial” won’t be used for filtering or grouping, exclude it. Each one adds complexity.

Time dimensions require special attention. A [Month] attribute needs a composite key of [Year] and [Month Name]. This distinguishes January 2023 from January 2024. With a single-column key on [Month Name], both Januaries collapse into one member and their totals are merged.
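
The effect of the key choice is easy to reproduce outside any cube engine. In this sketch, with invented sales rows, grouping by month name alone merges two different Januaries, while the composite key keeps them apart.

```python
from collections import defaultdict

# Hypothetical sales rows spanning two years.
sales = [
    {"year": 2023, "month": "January",  "amount": 100.0},
    {"year": 2024, "month": "January",  "amount": 250.0},
    {"year": 2024, "month": "February", "amount": 75.0},
]

def total_by(rows, key_fields):
    """Sum amounts grouped by the given key columns."""
    totals = defaultdict(float)
    for row in rows:
        totals[tuple(row[f] for f in key_fields)] += row["amount"]
    return dict(totals)

by_name = total_by(sales, ["month"])                # single-column key
by_year_month = total_by(sales, ["year", "month"])  # composite key
print(by_name)
print(by_year_month)
```

With the single-column key, January reports 350.0, a figure that belongs to no actual month.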

Hierarchies must respect cardinality: each level should have more members than the level above it. A logical flow is Year → Quarter → Month. Reversing this order, like Quarter → Year, violates logic and confuses users.

| Attribute Type | Include? | Reasoning |
|---|---|---|
| Customer Region | Yes | Essential for sales territory analysis |
| Exact Timestamp (to the second) | No | Rarely used for grouping; harms performance |
| Product Subcategory | Yes | Vital for drilling down from category |

Building Effective Aggregations and Monitoring KPIs

Aggregation design balances storage costs against query speed. Build pre-calculated summaries for frequent combinations like Year-Region-Product. Skip rare ones like Day-Customer-SKU.

Key Performance Indicators (KPIs) transform raw numbers into business scorecards. Instead of showing “1,200 sales orders,” a KPI displays “% Orders Shipped Same Day” with color-coded thresholds.

Define these thresholds centrally. For example, resolution times: under 48 hours (green), 48-72 hours (yellow), over 72 hours (red). This ensures consistency across all dashboards.
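
Defining the thresholds centrally can be as simple as one shared function that every report calls. The sketch below encodes the resolution-time bands from the example; the function name is made up, and treating exactly 48 and exactly 72 hours as yellow is an assumption about where the boundaries fall.

```python
GREEN_BELOW_HOURS = 48   # under 48 hours is green
YELLOW_UP_TO_HOURS = 72  # 48 to 72 hours inclusive is yellow (assumed boundary)

def resolution_status(hours):
    """Classify a resolution time into the shared KPI color bands."""
    if hours < GREEN_BELOW_HOURS:
        return "green"
    if hours <= YELLOW_UP_TO_HOURS:
        return "yellow"
    return "red"

for sample in (12, 48, 72, 90):
    print(sample, resolution_status(sample))
```

Keeping the constants in one place means a change to the thresholds reaches every dashboard at once.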

Continuously monitor KPI trends. A drop in your on-time shipment percentage from 95% to 87% signals an operational issue before it impacts customer satisfaction. This proactive analysis is the ultimate goal.

Final Thoughts on Implementing Optimal OLAP Cube Strategies

When properly implemented, these methodologies deliver more than just speed—they create a competitive advantage that grows with your business. The right approach transforms your data warehouse from a storage facility into an intelligence engine.

Start with your most critical analytical model. Apply star schema design, build targeted aggregations, and implement incremental processing. Measure the before-and-after performance gains to demonstrate value quickly.

Organizations that follow these practices can see query improvements on the order of 10x and storage reductions of around 50%, though results vary with workload and data. Your business intelligence platform becomes the asset it should be: executives get up-to-date dashboards, analysts explore freely, and IT stops firefighting.

The investment pays daily dividends through faster decisions and deeper insights. As data volumes grow, your well-architected foundation scales without complete redesigns. This isn’t a destination but an ongoing practice of refinement and adaptation.