You’re staring at a mountain of raw numbers—sales logs, web traffic, inventory counts—but the real story remains hidden. That critical gap between having information and gaining insight is where your data warehouse earns its keep.

The solution isn’t more data; it’s smarter structure. This is where two essential components come into play. One captures the measurable events—the quantitative heartbeat of your operations.

The other provides the rich context—the who, what, where, and when that gives those numbers meaning. Together, they transform chaotic spreadsheets into a navigable landscape for business intelligence.

As more organizations expect near-real-time analytics, understanding these foundational elements is no longer optional. It’s essential for making confident, data-driven decisions that keep you competitive.

We’ll break down how these components work individually and, more importantly, how they work in concert. You’ll see how to structure your information for maximum clarity and performance.

Understanding the Fundamentals of Data Warehousing

The gap between collecting business metrics and extracting meaningful intelligence often lies in how you structure your information. Effective data warehousing transforms chaotic streams into organized insights.

At the core of most data warehouses, two complementary structures work together: fact tables and dimension tables. Together they form the foundation for powerful analytics.

The Role of Fact Tables in Business Analytics

Fact tables serve as your quantitative command center. They capture measurable events like sales transactions, website clicks, or inventory movements.

These structures store numerical values you can aggregate and analyze. Think revenue totals, unit counts, or performance metrics.

The primary strength of fact tables lies in their ability to handle large volumes of numerical data efficiently. They’re optimized for calculations and trend analysis.

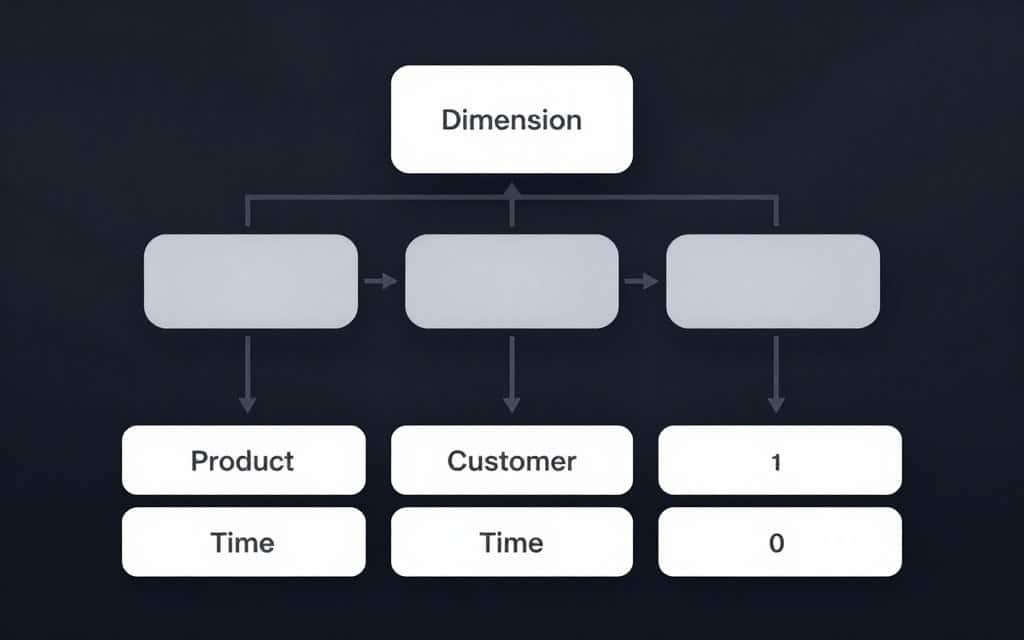

How Dimension Tables Enrich Data Context

Dimension tables provide the descriptive backdrop for your numbers. They answer the critical questions: who, what, when, and where.

Without this contextual layer, your metrics would lack meaning. You’d see sales figures but not know which products or customers generated them.

Dimension tables enable you to slice and analyze data from multiple perspectives. They transform raw numbers into actionable business intelligence.

| Feature | Fact Tables | Dimension Tables |
|---|---|---|
| Primary Content | Numerical metrics and measures | Descriptive attributes and categories |
| Data Type | Quantitative values | Qualitative information |
| Primary Function | Aggregation and calculation | Filtering and categorization |
| Typical Size | Large volume of records | Smaller set of descriptive entries |
| Query Performance | Optimized for numerical operations | Fast lookups and filtering |

Exploring Fact Tables: Measuring Business Events

When you need to track actual business performance, fact tables are where the numbers live. They capture the measurable events that matter to your operations.

These structures store the values you can sum, average, and analyze for critical insights.

Key Characteristics and Metrics

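A fact table’s primary strength lies in handling numerical measures you can aggregate to answer key business questions. Most measures, such as revenue or units sold, are fully additive. Others, such as account balances, are semi-additive and should not be summed across time.

Every fact table has a declared grain: a precise definition of what one row represents, such as a single sales line, a single website click, or one product’s stock level at the end of one day. A fine grain, such as individual transactions, enables detailed analysis of each occurrence.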

Fact tables connect to dimension tables through foreign keys that create analytical relationships. These keys let you slice your metrics from multiple perspectives.

You’ll typically work with three main types of fact table, each serving a distinct purpose in your data architecture.

Transaction fact tables capture individual events at the most detailed level. Think of every single sale or inventory movement getting its own record.

Periodic snapshot tables take regular measurements like daily inventory counts. They show status at consistent intervals rather than individual actions.

Accumulating snapshot tables track processes as they evolve through stages. The same row updates to show progression from start to completion.
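
To make the three types concrete, here is a minimal sketch using Python’s built-in `sqlite3` module with an in-memory database. The table and column names (`fact_sales`, `fact_inventory_daily`, `fact_order_fulfillment`) are hypothetical, and SQLite simply stands in for whatever warehouse engine you actually use. Notice that only the accumulating snapshot is updated after its row is first inserted.

```python
import sqlite3

# Hypothetical schemas: one table per fact-table type.
conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Transaction fact: one row per sale line; rows are inserted, never updated.
CREATE TABLE fact_sales (
    date_key    INTEGER NOT NULL,
    product_key INTEGER NOT NULL,
    store_key   INTEGER NOT NULL,
    quantity    INTEGER NOT NULL,
    amount      REAL    NOT NULL
);

-- Periodic snapshot: one row per product, per store, per day.
CREATE TABLE fact_inventory_daily (
    date_key         INTEGER NOT NULL,
    product_key      INTEGER NOT NULL,
    store_key        INTEGER NOT NULL,
    quantity_on_hand INTEGER NOT NULL,
    PRIMARY KEY (date_key, product_key, store_key)
);

-- Accumulating snapshot: one row per order, updated as milestones occur.
CREATE TABLE fact_order_fulfillment (
    order_id          INTEGER PRIMARY KEY,
    order_date_key    INTEGER NOT NULL,
    ship_date_key     INTEGER,  -- NULL until the order ships
    delivery_date_key INTEGER   -- NULL until the order is delivered
);
""")

# Transaction fact: every sale becomes a new row.
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)",
                 [(20240101, 1, 10, 2, 19.98), (20240101, 2, 10, 1, 5.00)])

# Periodic snapshot: a reading is taken each day, even if nothing moved.
conn.executemany("INSERT INTO fact_inventory_daily VALUES (?, ?, ?, ?)",
                 [(20240101, 1, 10, 48), (20240102, 1, 10, 48)])

# Accumulating snapshot: insert once, then update the same row per milestone.
conn.execute("INSERT INTO fact_order_fulfillment (order_id, order_date_key) "
             "VALUES (1001, 20240101)")
conn.execute("UPDATE fact_order_fulfillment SET ship_date_key = 20240103 "
             "WHERE order_id = 1001")
conn.execute("UPDATE fact_order_fulfillment SET delivery_date_key = 20240105 "
             "WHERE order_id = 1001")

row = conn.execute("SELECT * FROM fact_order_fulfillment").fetchone()
print(row)  # (1001, 20240101, 20240103, 20240105)
```

In a real pipeline the accumulating snapshot’s updates would come from the load process as each milestone event arrives. The `UPDATE` statements here only simulate that.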

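Fact tables themselves usually stay narrow, holding little more than foreign keys and measures. The denormalization that speeds up star-schema queries typically happens in the surrounding dimension tables, a design choice that prioritizes analytical speed over storage efficiency.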

Diving into Dimension Tables: Adding Context and Clarity

Without proper context, your sales figures are just numbers on a screen—dimension tables provide the crucial ‘who, what, when, and where’ that turns data into decisions. They’re the descriptive backbone that gives meaning to your quantitative measurements.

Descriptive Attributes and Contextual Details

Each dimension table contains qualitative information that lets you filter and segment your analysis. You’ll find customer names, product categories, and geographic regions that add depth to your reporting.

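These tables typically use surrogate keys: system-generated integers that carry no business meaning. Because they are independent of the natural business identifiers in source systems, they stay stable when those identifiers change, and they let a single customer or product have several historical versions.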

Unlike rapidly updating transactional data, dimension tables change at a slower pace. They represent stable business entities like your product catalog or customer segments.

You’ll encounter several types of these contextual structures in practice. Conformed dimensions are shared across fact tables with identical keys and attribute definitions, so “region” means the same thing in a sales report as it does in a returns report.

Role-playing dimensions serve multiple analytical purposes within the same schema. A single date dimension might track order, ship, and delivery dates simultaneously.
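
A role-playing dimension is usually implemented by joining the same physical table several times under different aliases. The sketch below assumes a hypothetical `dim_date` table and a `fact_orders` table that carries three date foreign keys. It uses SQLite through Python’s `sqlite3` module purely so that it runs anywhere.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_date (
    date_key  INTEGER PRIMARY KEY,   -- e.g. 20240105
    full_date TEXT NOT NULL,
    day_name  TEXT NOT NULL
);
CREATE TABLE fact_orders (
    order_id          INTEGER PRIMARY KEY,
    order_date_key    INTEGER REFERENCES dim_date(date_key),
    ship_date_key     INTEGER REFERENCES dim_date(date_key),
    delivery_date_key INTEGER REFERENCES dim_date(date_key)
);
INSERT INTO dim_date VALUES
    (20240101, '2024-01-01', 'Monday'),
    (20240103, '2024-01-03', 'Wednesday'),
    (20240105, '2024-01-05', 'Friday');
INSERT INTO fact_orders VALUES (1001, 20240101, 20240103, 20240105);
""")

# One physical dim_date table, joined three times: once per role it plays.
rows = conn.execute("""
SELECT f.order_id,
       od.day_name AS order_day,
       sd.day_name AS ship_day,
       dd.day_name AS delivery_day
FROM fact_orders AS f
JOIN dim_date AS od ON f.order_date_key    = od.date_key
JOIN dim_date AS sd ON f.ship_date_key     = sd.date_key
JOIN dim_date AS dd ON f.delivery_date_key = dd.date_key
""").fetchall()
print(rows)  # [(1001, 'Monday', 'Wednesday', 'Friday')]
```

Some teams also wrap each alias in a view (for example, a `ship_date` view) so report builders see three clearly named dimensions backed by one table.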

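Slowly changing dimensions (SCDs) define how attribute changes are handled over time. A Type 1 change simply overwrites the old value, while a Type 2 change adds a new row with a new surrogate key. Type 2 preserves historical context even when customers move or products get recategorized.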
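
Here is a minimal sketch of a Type 2 change. It assumes a hypothetical `dim_customer` table keyed by an auto-generated surrogate key, with `valid_from`, `valid_to`, and `is_current` columns for versioning. It uses SQLite via Python’s `sqlite3` module. A production warehouse might express the same logic with a `MERGE` statement or in its load tooling instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
    customer_id  TEXT NOT NULL,                      -- natural/business key
    city         TEXT NOT NULL,
    valid_from   TEXT NOT NULL,
    valid_to     TEXT,                               -- NULL = still current
    is_current   INTEGER NOT NULL DEFAULT 1
);
""")

def scd2_upsert(conn, customer_id, city, effective_date):
    """Hypothetical helper: expire the current version and insert a new one
    if the tracked attribute changed. Returns the current surrogate key."""
    current = conn.execute(
        "SELECT customer_key, city FROM dim_customer "
        "WHERE customer_id = ? AND is_current = 1", (customer_id,)).fetchone()
    if current is not None and current[1] == city:
        return current[0]  # nothing changed; keep the existing version
    if current is not None:
        # Close out the old version instead of overwriting it (Type 2).
        conn.execute(
            "UPDATE dim_customer SET valid_to = ?, is_current = 0 "
            "WHERE customer_key = ?", (effective_date, current[0]))
    cur = conn.execute(
        "INSERT INTO dim_customer (customer_id, city, valid_from) "
        "VALUES (?, ?, ?)", (customer_id, city, effective_date))
    return cur.lastrowid

k1 = scd2_upsert(conn, "C-42", "Denver", "2023-01-01")
k2 = scd2_upsert(conn, "C-42", "Austin", "2024-06-01")  # the customer moved
history = conn.execute(
    "SELECT customer_key, city, valid_to, is_current FROM dim_customer "
    "ORDER BY customer_key").fetchall()
print(history)  # [(1, 'Denver', '2024-06-01', 0), (2, 'Austin', None, 1)]
```

Fact rows loaded while the customer lived in Denver keep pointing to surrogate key 1, so historical sales stay attributed to Denver after the move.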

Fact Tables vs Dimension Tables

Your analytics capability hinges on how quantitative events and descriptive context work together. These two structures form the backbone of every effective data warehouse.

Here’s the essential distinction you must grasp. One component stores measurable business metrics while the other provides the descriptive framework that gives those numbers meaning.

A fact table stores numerical measurements like sales amounts or transaction counts. It captures what actually happened in your business operations.

Each fact table maintains foreign keys that create relationships with your descriptive structures. This connection enables multidimensional analysis of your quantitative data.

The dimension table contains qualitative attributes that answer critical business questions. You’ll find customer details, product categories, and time periods that enrich your analysis.

Every dimension table uses a primary key that appears as a foreign key in your fact tables. This relationship transforms raw numbers into actionable intelligence.
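
The key relationship is easiest to see in a small query. This sketch assumes a hypothetical star schema with `fact_sales`, `dim_product`, and `dim_store`, built in an in-memory SQLite database through Python’s `sqlite3` module. The fact table supplies the sums, and the dimensions supply the labels used for grouping.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    category    TEXT NOT NULL
);
CREATE TABLE dim_store (
    store_key INTEGER PRIMARY KEY,
    region    TEXT NOT NULL
);
-- Each foreign key in the fact table references a dimension's primary key.
CREATE TABLE fact_sales (
    product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
    store_key   INTEGER NOT NULL REFERENCES dim_store(store_key),
    quantity    INTEGER NOT NULL,
    amount      REAL    NOT NULL
);
INSERT INTO dim_product VALUES (1, 'Espresso', 'Coffee'), (2, 'Green Tea', 'Tea');
INSERT INTO dim_store   VALUES (10, 'West'), (20, 'East');
INSERT INTO fact_sales  VALUES (1, 10, 2, 7.00), (1, 20, 1, 3.50),
                               (2, 10, 3, 6.00), (1, 10, 1, 3.50);
""")

# "How much" comes from the fact table; "which category, which region"
# comes from the dimensions through the foreign-key joins.
rows = conn.execute("""
SELECT p.category, s.region,
       SUM(f.quantity) AS units, SUM(f.amount) AS revenue
FROM fact_sales AS f
JOIN dim_product AS p ON f.product_key = p.product_key
JOIN dim_store   AS s ON f.store_key   = s.store_key
GROUP BY p.category, s.region
ORDER BY p.category, s.region
""").fetchall()
print(rows)
# [('Coffee', 'East', 1, 3.5), ('Coffee', 'West', 3, 10.5), ('Tea', 'West', 3, 6.0)]
```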

Think of it this way: one answers “how much” while the other answers “who, what, where, and when.” You need both components working together to extract true business value from your information.

The partnership between these structures makes your entire analytical system functional. One provides the measurements, the other provides the context, and their connection creates meaningful reporting.

Mapping Schema Designs: Star Schema vs Snowflake Schema

Now that you grasp the core components, the next logical step is arranging them for optimal performance. Your choice between a star schema and a snowflake schema directly impacts query speed and maintenance ease.

Both designs organize your central fact table and descriptive dimension tables. Their structural differences, however, lead to distinct advantages for your business intelligence needs.

Benefits of a Star Schema

The star schema offers a beautifully simple and intuitive layout. Your fact table sits directly connected to flat, denormalized dimension tables.

Because each dimension is a single flat table, a query needs only one join per dimension it uses. Business users find the layout easy to understand and easy to build reports on.

You typically get faster queries and a model that is simpler to navigate. The straightforward structure also matches the star-schema modeling that Microsoft recommends for Power BI.

Understanding the Complexity of a Snowflake Schema

A snowflake schema normalizes your dimension tables to eliminate data redundancy. It breaks them into multiple related tables for better organization.

This approach can improve data integrity and save storage space. The trade-off is increased complexity with more tables and joins.

Queries may run slower due to this intricate structure. It prioritizes a normalized data structure over raw query speed.
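
The difference shows up directly in SQL. In this sketch (hypothetical tables, SQLite via Python’s `sqlite3`), the same category-level revenue question needs one join in the star layout and two in the snowflake layout, yet both return identical results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star: the category name is stored directly on the flat product dimension.
CREATE TABLE dim_product_star (
    product_key INTEGER PRIMARY KEY, name TEXT, category_name TEXT);

-- Snowflake: the category is normalized into its own table.
CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE dim_product_snow (
    product_key INTEGER PRIMARY KEY, name TEXT,
    category_key INTEGER REFERENCES dim_category(category_key));

CREATE TABLE fact_sales (product_key INTEGER, amount REAL);

INSERT INTO dim_product_star VALUES
    (1, 'Espresso', 'Coffee'), (2, 'Latte', 'Coffee'), (3, 'Sencha', 'Tea');
INSERT INTO dim_category VALUES (100, 'Coffee'), (200, 'Tea');
INSERT INTO dim_product_snow VALUES
    (1, 'Espresso', 100), (2, 'Latte', 100), (3, 'Sencha', 200);
INSERT INTO fact_sales VALUES (1, 3.0), (2, 4.5), (3, 2.0), (1, 3.0);
""")

star_sql = """
SELECT p.category_name, SUM(f.amount)
FROM fact_sales f
JOIN dim_product_star p ON f.product_key = p.product_key
GROUP BY p.category_name ORDER BY p.category_name"""

# The snowflake version needs one extra join to reach the category name.
snow_sql = """
SELECT c.category_name, SUM(f.amount)
FROM fact_sales f
JOIN dim_product_snow p ON f.product_key = p.product_key
JOIN dim_category c     ON p.category_key = c.category_key
GROUP BY c.category_name ORDER BY c.category_name"""

star = conn.execute(star_sql).fetchall()
snow = conn.execute(snow_sql).fetchall()
print(star)  # [('Coffee', 10.5), ('Tea', 2.0)]
print(star == snow)  # True
```

The star version repeats the string `Coffee` on every coffee product row, which is the redundancy the snowflake design removes. The snowflake version pays for that with the extra join.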

| Design Aspect | Star Schema | Snowflake Schema |
|---|---|---|
| Primary Goal | Query Performance & Simplicity | Storage Efficiency & Normalization |
| Dimension Structure | Flat, Denormalized | Normalized, Hierarchical |
| Join Complexity | Minimal Joins | Multiple Joins Required |
| Best For | Fast-paced Business Intelligence | Complex, Storage-Sensitive Environments |

Your decision hinges on whether you value speed and simplicity or storage efficiency. For most analytical workloads, the star schema is the better default because its queries need fewer joins.

Optimizing Data Warehousing Performance

Performance tuning transforms your warehouse from a passive repository into an active analytical engine. The right optimizations make the difference between sluggish queries and instant insights.

Strategic enhancements keep your system responsive to business demands. You’ll move from waiting on slow reports to getting timely answers.

Indexing Strategies and Query Efficiency

Smart indexing separates adequate systems from exceptional ones. Composite indexes on a fact table’s foreign-key columns speed up joins and filters against the dimension tables.
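
As a minimal sketch of a composite index on fact-table foreign keys, the example below uses SQLite through Python’s `sqlite3` module and inspects the chosen plan with `EXPLAIN QUERY PLAN`. The table and index names are hypothetical. SQLite has no bitmap indexes or native table partitioning, so this illustrates only the composite-index idea, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE fact_sales (
    date_key INTEGER, product_key INTEGER, store_key INTEGER, amount REAL)""")

# Hypothetical data: 28 days x 50 products, one row each.
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
    [(20240100 + d, p, 10, 1.0) for d in range(1, 29) for p in range(1, 51)])

# Composite index on the foreign keys that queries most often filter by.
conn.execute(
    "CREATE INDEX idx_sales_date_product ON fact_sales (date_key, product_key)")

query = """SELECT SUM(amount) FROM fact_sales
           WHERE date_key = 20240115 AND product_key = 7"""

# The last column of each EXPLAIN QUERY PLAN row is the human-readable detail.
plan = conn.execute("EXPLAIN QUERY PLAN " + query).fetchall()
details = [row[-1] for row in plan]
print(details)  # should show a SEARCH step using idx_sales_date_product

total = conn.execute(query).fetchone()[0]
print(total)  # 1.0
```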

Bitmap indexes, offered by engines such as Oracle, suit low-cardinality columns. They enable fast filtering on categories like regions or product types.

Partitioning a fact table by time interval, such as month, lets the database skip partitions outside the queried range entirely. Your queries run faster when they only scan relevant date ranges.

Columnar storage formats like Parquet store each column separately and compress it well, so files shrink and queries read only the columns they need. This matters when your fact tables contain millions of rows.

Materialized views pre-calculate commonly requested summaries. Instead of summing transactions repeatedly, you query pre-aggregated totals.
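
SQLite, used here through Python’s `sqlite3` module, has no materialized views, so this sketch emulates one with a summary table and a full-refresh function. The names `agg_daily_store_sales` and `refresh_daily_store_sales` are hypothetical. Engines such as PostgreSQL provide the feature natively with `CREATE MATERIALIZED VIEW` and `REFRESH MATERIALIZED VIEW`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE fact_sales (date_key INTEGER, store_key INTEGER, amount REAL);
INSERT INTO fact_sales VALUES
    (20240101, 10, 5.0), (20240101, 10, 7.5), (20240101, 20, 2.5),
    (20240102, 10, 4.0);
-- Summary table standing in for a materialized view.
CREATE TABLE agg_daily_store_sales (
    date_key INTEGER, store_key INTEGER, revenue REAL, txn_count INTEGER,
    PRIMARY KEY (date_key, store_key));
""")

def refresh_daily_store_sales(conn):
    """Full refresh: recompute the pre-aggregated totals from the fact table."""
    with conn:  # commits both statements together, rolls back on error
        conn.execute("DELETE FROM agg_daily_store_sales")
        conn.execute("""
            INSERT INTO agg_daily_store_sales
            SELECT date_key, store_key, SUM(amount), COUNT(*)
            FROM fact_sales GROUP BY date_key, store_key""")

refresh_daily_store_sales(conn)
# Dashboards read the small summary table instead of re-summing every sale.
summary = conn.execute(
    "SELECT * FROM agg_daily_store_sales ORDER BY date_key, store_key").fetchall()
print(summary)
# [(20240101, 10, 12.5, 2), (20240101, 20, 2.5, 1), (20240102, 10, 4.0, 1)]

# New sales arrive; the summary stays stale until the next refresh.
conn.execute("INSERT INTO fact_sales VALUES (20240102, 10, 6.0)")
refresh_daily_store_sales(conn)
jan2 = conn.execute("SELECT revenue, txn_count FROM agg_daily_store_sales "
                    "WHERE date_key = 20240102").fetchone()
print(jan2)  # (10.0, 2)
```

A full refresh is the simplest strategy. An incremental refresh avoids recomputing unchanged days but needs more bookkeeping.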

Query caching captures frequently accessed information in memory. Multiple users get instant responses when requesting the same dashboard.

Regular performance audits reveal slow queries and unused indexes. Continuous monitoring catches regressions before they spread to every dashboard that depends on the affected tables.

Enhancing Business Intelligence Insights with Structured Data

With the right data architecture, interactive dashboards stop being static reports and become decision-making tools. Your organized information foundation powers every analytical visualization your team relies on.

Modern BI platforms like Power BI and Tableau work especially well with star schema designs. This compatibility turns your structured information into actionable business intelligence.

Leveraging Data for Effective Dashboards

The clean separation between quantitative measures and descriptive attributes creates intuitive reporting experiences. Business users can drag and drop fields without writing complex queries.

Dimension tables provide the filtering capabilities that make dashboards truly interactive. Users slice sales information by region or drill into customer segments with simple clicks.

Consider a sales executive analyzing regional performance. They combine transaction measures with customer demographics and product categories. This reveals which items sell best in specific markets.

Well-structured dashboards can load in seconds rather than minutes, even over millions of records. Pre-aggregated measures combined with rich contextual details create responsive analytical experiences.

When your data warehouse is properly structured, you deliver actionable intelligence that transforms organizational decision-making. The right architecture turns raw numbers into strategic insights.

Real-World Applications in Business Intelligence

Across retail, finance, and healthcare, organizations are solving concrete challenges with these foundational components. Let’s examine how different industries implement these structures for actionable insights.

Retailers build sales fact structures that capture every transaction with precise details. Each record includes amount, quantity, timestamp, and connections to customer, product, and location elements.

E-commerce platforms track order fulfillment through multiple stages. They record milestones from payment confirmation to final delivery with exact timestamps.

Financial institutions use balance snapshot structures for monthly account tracking. This approach enables trend analysis without summing every individual transaction.
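
Balance snapshots also show why some measures need careful handling: balances are semi-additive. Summing them across accounts for one month is meaningful, but summing one account’s balances across months is not, so an average or period-end value is used along the time axis. The sketch below uses a hypothetical `fact_account_balance_monthly` table in SQLite via Python’s `sqlite3`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Periodic snapshot: one row per account per month-end.
CREATE TABLE fact_account_balance_monthly (
    month_key INTEGER, account_key INTEGER, balance REAL,
    PRIMARY KEY (month_key, account_key));
INSERT INTO fact_account_balance_monthly VALUES
    (202401, 1, 1000.0), (202401, 2, 500.0),
    (202402, 1, 1200.0), (202402, 2, 300.0),
    (202403, 1, 1100.0), (202403, 2, 700.0);
""")

# Valid: summing balances across accounts within a single month.
total_by_month = conn.execute("""
    SELECT month_key, SUM(balance) FROM fact_account_balance_monthly
    GROUP BY month_key ORDER BY month_key""").fetchall()

# Summing one account across months would be meaningless; average instead.
avg_by_account = conn.execute("""
    SELECT account_key, AVG(balance) FROM fact_account_balance_monthly
    GROUP BY account_key ORDER BY account_key""").fetchall()

print(total_by_month)  # [(202401, 1500.0), (202402, 1500.0), (202403, 1800.0)]
print(avg_by_account)  # [(1, 1100.0), (2, 500.0)]
```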

Marketing teams employ factless fact tables, which hold foreign keys but no numeric measures, to monitor event attendance patterns. They simply record which customers attended specific events at precise times.
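
Because a factless fact table has no numeric measure to sum, analysis counts rows instead. This sketch assumes hypothetical `dim_event` and `fact_event_attendance` tables in SQLite via Python’s `sqlite3`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_event (event_key INTEGER PRIMARY KEY, event_name TEXT);
-- Factless fact: foreign keys plus a timestamp; no numeric measures.
CREATE TABLE fact_event_attendance (
    event_key    INTEGER REFERENCES dim_event(event_key),
    customer_key INTEGER,
    attended_at  TEXT);
INSERT INTO dim_event VALUES (1, 'Spring Webinar'), (2, 'Product Launch');
INSERT INTO fact_event_attendance VALUES
    (1, 501, '2024-03-12 10:00'), (1, 502, '2024-03-12 10:02'),
    (2, 501, '2024-04-02 18:30');
""")

# The "measure" is simply how many attendance rows exist per event.
attendance = conn.execute("""
    SELECT e.event_name, COUNT(*) AS attendees
    FROM fact_event_attendance f
    JOIN dim_event e ON f.event_key = e.event_key
    GROUP BY e.event_name ORDER BY attendees DESC""").fetchall()
print(attendance)  # [('Spring Webinar', 2), ('Product Launch', 1)]
```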

Healthcare organizations combine patient visit records with provider and facility details. This enables analysis of treatment patterns and outcome correlations.

Manufacturing companies track production metrics against equipment and shift data. They optimize operations by analyzing defect rates and machine efficiency.

These practical implementations demonstrate how measurable events and descriptive context work together. Each example shows the power of structured data analysis in real business scenarios.

Common Challenges and Best Practices in Data Modeling

Even the most elegant data warehouse design can face real-world hurdles that threaten data integrity and query performance. Let’s tackle the most common issues head-on with practical solutions.

Addressing Data Redundancy and Integrity

Denormalized schemas boost speed but can lead to repeated information. This repetition consumes storage and risks inconsistencies if updates are not managed carefully.

The solution lies in stable surrogate keys and clear update procedures. These keys remain constant even when business attributes change, preserving historical accuracy.

Maintaining precise granularity in your core structures is non-negotiable. Mixing summary and transaction-level data in one place creates confusion and hampers accurate analysis.
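
A small example shows why mixed grain is dangerous. In this sketch (hypothetical table, SQLite via Python’s `sqlite3`), a daily summary row sits next to the transactions it summarizes, and a naive `SUM` double-counts revenue.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fact_sales (date_key INTEGER, row_type TEXT, amount REAL)")

# Anti-pattern: transaction rows and a daily summary row share one table.
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", [
    (20240101, "txn", 10.0),
    (20240101, "txn", 15.0),
    (20240101, "daily_total", 25.0),  # summary of the two rows above
])

naive = conn.execute("SELECT SUM(amount) FROM fact_sales").fetchone()[0]
print(naive)  # 50.0, revenue is double-counted

# Only correct if every query remembers to filter to one grain.
correct = conn.execute(
    "SELECT SUM(amount) FROM fact_sales WHERE row_type = 'txn'").fetchone()[0]
print(correct)  # 25.0
```

Filtering on `row_type` rescues this one query, but every future query would have to remember the same filter. Keeping summaries in a separate table with its own declared grain removes that trap.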

Strategies for Consistent Data Quality

Quality begins at the source. Validate incoming information before loading it to prevent pollution of your analytical foundation.

Implement regular audits to catch orphaned records (fact rows whose foreign keys match no dimension row) and anomalous measure values. This proactive monitoring stops small issues from cascading into misleading reports.
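
Both audits can be expressed as plain SQL. The sketch below uses hypothetical `fact_sales` and `dim_product` tables in SQLite via Python’s `sqlite3`. It finds orphaned fact rows with a `LEFT JOIN ... IS NULL` pattern and applies one example measure rule. Treat the negative-amount rule as an assumption: a design that records returns as negative sales would need a different check.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY, product_key INTEGER, amount REAL);
INSERT INTO dim_product VALUES (1, 'Espresso'), (2, 'Green Tea');
INSERT INTO fact_sales VALUES (1, 1, 3.5), (2, 2, 2.0), (3, 99, 4.0), (4, 1, -3.5);
""")

def find_orphans(conn):
    """Fact rows whose product_key has no matching dimension row."""
    return conn.execute("""
        SELECT f.sale_id, f.product_key
        FROM fact_sales f
        LEFT JOIN dim_product p ON f.product_key = p.product_key
        WHERE p.product_key IS NULL""").fetchall()

def find_negative_amounts(conn):
    """Hypothetical measure rule: sale amounts should not be negative."""
    return conn.execute(
        "SELECT sale_id, amount FROM fact_sales WHERE amount < 0").fetchall()

orphans = find_orphans(conn)
negatives = find_negative_amounts(conn)
print(orphans)    # [(3, 99)]
print(negatives)  # [(4, -3.5)]
```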

Pre-aggregate common calculations using materialized views. This balances the need for detailed records with the reality that most queries require summarized results for speed.

Your data environment is a living system, not a one-time project. Ongoing maintenance and optimization are essential for long-term reliability and performance.

| Challenge | Root Cause | Best Practice Solution |
|---|---|---|
| Data Redundancy | Denormalized Schema Design | Use Surrogate Keys, Controlled Update Processes |
| Integrity Risks | Orphaned Records, Update Errors | Regular Foreign Key Audits, Slowly Changing Dimension Strategies |
| Performance Bottlenecks | Mixed Granularity, Lack of Aggregation | Clear Grain Definition, Materialized Views for Summaries |
| Quality Degradation | Poor Data Ingestion Controls | Source Validation, Automated Testing at Ingestion |

Integrating AI-Driven Data Observability for Reliable Warehousing

Manual data quality checks can’t keep pace with modern data volumes. AI-powered observability platforms automate anomaly detection so problems surface before they affect the business. Your warehouse needs continuous monitoring to maintain trust in decision-making.

Monitoring, Anomaly Detection, and Proactive Maintenance

Platforms like Monte Carlo and Acceldata transform reactive firefighting into proactive maintenance. They learn your normal patterns—daily transaction volumes, typical update schedules, expected measurement ranges.

These tools automatically flag deviations that indicate potential problems. You’ll catch issues before stakeholders notice wrong dashboard numbers.

AI-driven validation checks that foreign keys still resolve and that measures stay within expected ranges. The system also monitors required attributes and compares row counts against expectations.
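
The detection models inside commercial platforms are their own and are not reproduced here. As a far simpler stand-in for the row-count check, the sketch below flags a day’s load when its row count falls more than three standard deviations from recent history (a z-score rule), using only Python’s standard `statistics` module. The function name, threshold, and counts are hypothetical.

```python
from statistics import mean, stdev

def flag_row_count_anomaly(history, today, threshold=3.0):
    """Return True if today's row count is more than `threshold` standard
    deviations from the mean of the recent daily counts in `history`."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        return today != mu  # perfectly flat history: any change is unusual
    z = (today - mu) / sigma
    return abs(z) > threshold

# Hypothetical daily row counts for a sales fact table.
last_14_days = [10120, 9980, 10250, 10040, 9900, 10310, 10080,
                10150, 9950, 10200, 10010, 10090, 9970, 10180]

print(flag_row_count_anomaly(last_14_days, 10100))  # False: a normal day
print(flag_row_count_anomaly(last_14_days, 4200))   # True: possibly a partial load
```

A fixed z-score ignores weekly seasonality and trends, which is one reason dedicated tools learn richer baselines.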

Performance monitoring identifies slow queries and optimization opportunities. You’ll see exactly which joins create bottlenecks or which partitions get scanned unnecessarily.

| Monitoring Aspect | Manual Approach | AI-Driven Observability |
|---|---|---|
| Anomaly Detection | Reactive, After Business Impact | Proactive, Before Issues Surface |
| Validation Process | Scheduled, Sample-Based Checks | Continuous, Comprehensive Monitoring |
| Performance Insights | Manual Query Analysis | Automated Bottleneck Identification |
| Data Governance | Periodic Compliance Audits | Real-Time Policy Enforcement |
| Integration Scope | Limited to Specific Systems | End-to-End Pipeline Coverage |

This approach ensures your analytical foundation remains reliable without constant manual intervention. You gain confidence that your information supports accurate business decisions.

Wrapping Up: Key Takeaways for Data-Driven Success

Armed with this knowledge, you’re ready to transform chaotic information streams into structured intelligence that drives business success.

You now grasp the essential partnership between quantitative measures and descriptive attributes. This relationship forms the backbone of every effective analytical system.

The star schema design elegantly connects these components through foreign keys. This structure enables fast, intuitive queries that deliver immediate insights.

Performance optimization becomes critical when handling massive datasets. Strategic indexing, partitioning, and pre-aggregation ensure your warehouse responds quickly to business demands.

Maintain clear granularity levels and implement regular audits. These practices keep your analytical foundation reliable and accurate over time.

Your next step is clear: apply these concepts to build or refine your own data environment. Transform raw information into the competitive intelligence that powers confident decision-making.